PFN members participated in the NIPS’17 Adversarial Learning Competition, one of the competition events of the international machine learning conference NIPS’17, which was hosted on Kaggle, and we finished in fourth place. As a result, we were invited to give a presentation at NIPS’17, and we have also written and published a paper explaining our method. In this article, I will describe the details of the competition as well as the approach we took to achieve fourth place.

What Is an Adversarial Example?

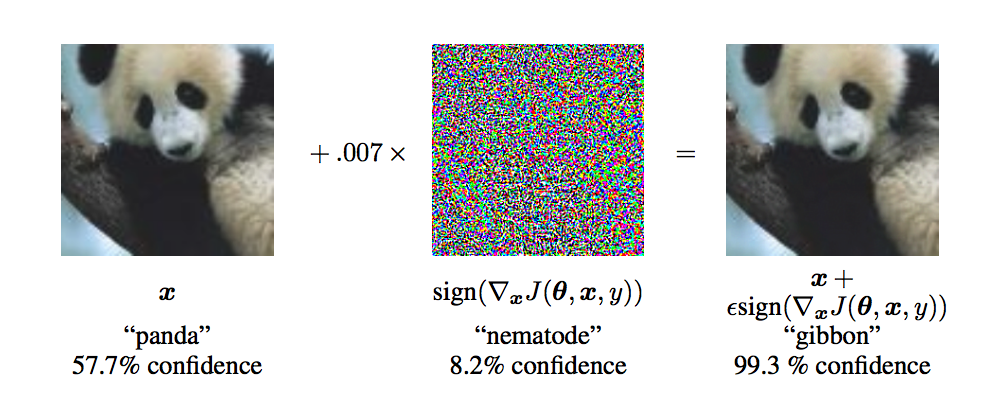

Adversarial examples [1, 2, 3] are a very hot research topic and are said to be one of the biggest challenges facing practical applications of deep learning. Take image recognition, for example. It is known that adversarial examples can cause a CNN to misclassify images through small modifications to the original images that are too subtle for humans to notice.

The images above show an adversarial example (ref. [2]). The left image is a picture of a panda that the CNN correctly classifies as a panda. In the middle is maliciously crafted noise. The right image looks the same as the left panda, but it has the slight noise superimposed on it, causing the CNN to classify it not as a panda but as a gibbon with very high confidence.

- [1] Christian Szegedy, Wojciech Zaremba, Ilya Sutskever, Joan Bruna, Dumitru Erhan, Ian J. Goodfellow, Rob Fergus: Intriguing properties of neural networks. CoRR abs/1312.6199 (2013)

- [2] Ian J. Goodfellow, Jonathon Shlens, Christian Szegedy: Explaining and Harnessing Adversarial Examples. CoRR abs/1412.6572 (2014)

NIPS’17 Adversarial Learning Competition

The NIPS’17 Adversarial Learning Competition we took part in was, as the name suggests, a competition on adversarial examples. I will explain its two types of tracks: Attack and Defense.

Attack Track

You must submit a program that maliciously adds noise to input images to convert them into adversarial examples. You earn points depending on how well the adversarial images generated by your algorithm fool the image classifiers submitted to the defense track by other competitors. Specifically, your score is the misclassification rate averaged over all submitted defense classifiers. The goal of the attack track is to develop a method for crafting formidable adversarial examples.

Defense Track

You must submit a program that returns a classification result for each input image. Your score is the classification accuracy on adversarial images, averaged over all adversarial example generators submitted to the attack track by other teams. The goal of the defense track is to build a robust image classifier that is hard to fool.
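The scoring described for the two tracks can be illustrated with a small sketch. It assumes every attack produces the same number of images and that the results are stored in a hypothetical boolean array `correct[a, d, i]`; the function names are illustrative and this is not the organizers' evaluation code.

```python
import numpy as np

def attack_scores(correct):
    """Score per attack: misclassification rate averaged over all defenses.

    correct[a, d, i] is True when defense d correctly classified
    image i generated by attack a (hypothetical layout).
    """
    correct = np.asarray(correct, dtype=bool)
    per_defense = (~correct).mean(axis=2)  # shape: (attacks, defenses)
    return per_defense.mean(axis=1)

def defense_scores(correct):
    """Score per defense: accuracy averaged over all attacks."""
    correct = np.asarray(correct, dtype=bool)
    per_attack = correct.mean(axis=2)      # shape: (attacks, defenses)
    return per_attack.mean(axis=0)

# Toy results: 2 attacks x 2 defenses x 4 images
correct = [
    [[1, 1, 0, 0], [1, 0, 0, 0]],  # images from attack 0
    [[1, 1, 1, 1], [1, 1, 1, 0]],  # images from attack 1
]
print(attack_scores(correct))   # attack 0 fools the defenses more often
print(defense_scores(correct))  # defense 0 is the more robust one
```

In this toy data, attack 0 scores 0.625 and attack 1 scores 0.125, while the two defenses score 0.75 and 0.5.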

Rules in Detail

Your programs have to process multiple images. Programs in the attack track are only allowed to generate noise up to the parameter ε, which is given when they are run. Specifically, an attack can change the R, G, and B values of each pixel in each image by at most ε. In other words, the L∞ norm of the noise must be less than or equal to ε. The attack track is divided into non-targeted and targeted subtracks, and we participated in the non-targeted one, which is the focus of this article. For more details, please refer to the following official competition pages [4, 5, 6].

- [4] NIPS 2017: Non-targeted Adversarial Attack | Kaggle : https://www.kaggle.com/c/nips-2017-non-targeted-adversarial-attack

- [5] NIPS 2017: Targeted Adversarial Attack | Kaggle : https://www.kaggle.com/c/nips-2017-targeted-adversarial-attack

- [6] NIPS 2017: Defense Against Adversarial Attack | Kaggle : https://www.kaggle.com/c/nips-2017-defense-against-adversarial-attack
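The ε constraint in the rules can be enforced by clipping the noise channel by channel. The following is a minimal sketch, assuming pixel values in the range 0 to 255; the function name `project_linf` is made up for illustration and is not part of the competition toolkit.

```python
import numpy as np

def project_linf(original, candidate, eps):
    """Return the image closest to `candidate` whose noise satisfies
    max|noise| <= eps, while staying in the valid pixel range [0, 255]."""
    original = np.asarray(original, dtype=np.float64)
    candidate = np.asarray(candidate, dtype=np.float64)
    # Clip the per-channel noise to [-eps, eps] (the L-infinity ball)
    noise = np.clip(candidate - original, -eps, eps)
    # Clip again so the result is still a valid image
    return np.clip(original + noise, 0.0, 255.0)

original = np.array([[10.0, 250.0, 0.0]])
candidate = np.array([[30.0, 260.0, -5.0]])
adversarial = project_linf(original, candidate, eps=16)
print(adversarial)                           # [[ 26. 255.   0.]]
print(np.abs(adversarial - original).max())  # 16.0
```

Clipping to the pixel range can only shrink the noise, so the result still satisfies the ε bound.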

Standard Approach for Creating Adversarial Examples

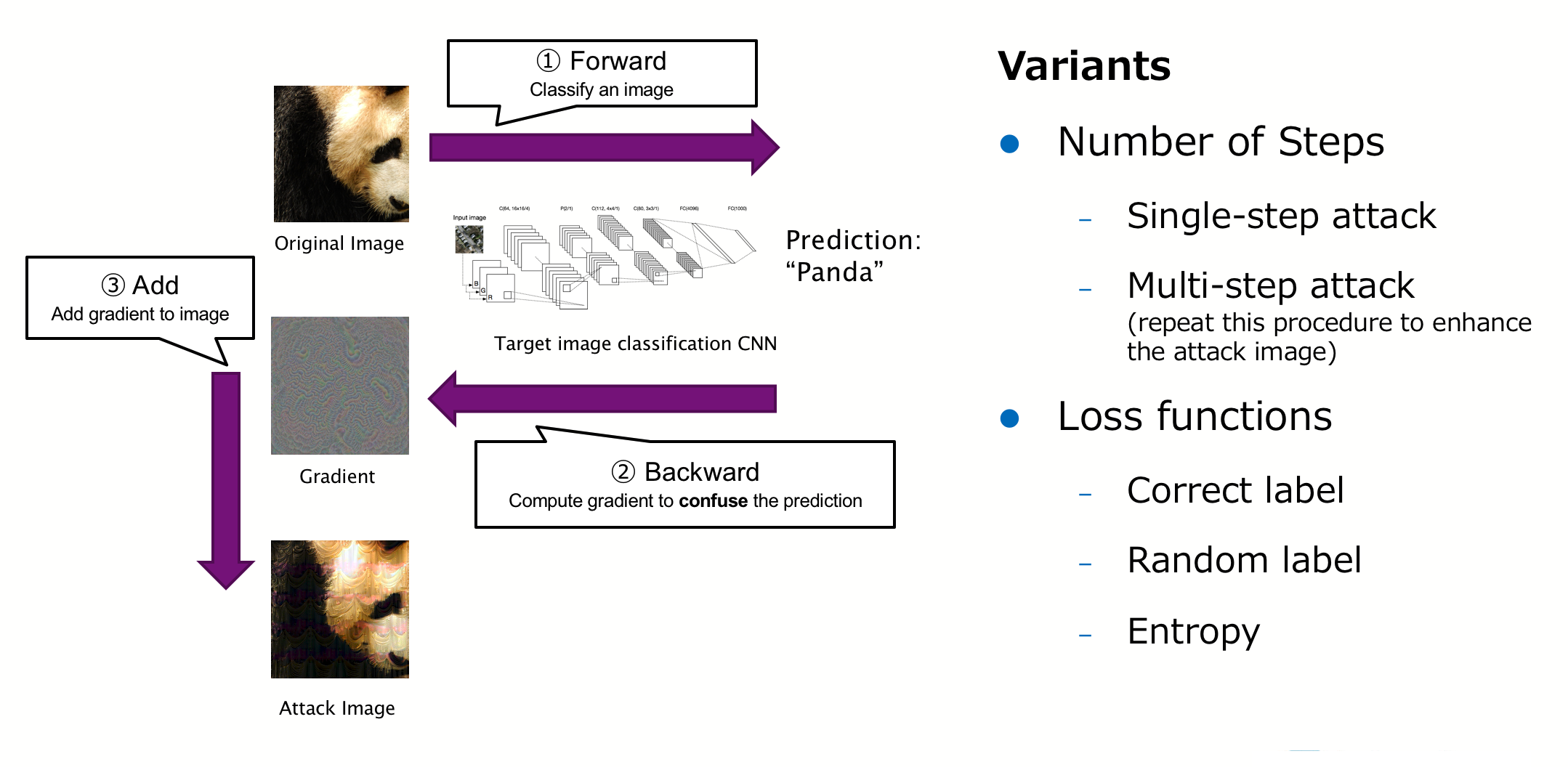

We competed in the attack track. First, I will describe the standard methods for creating adversarial examples. Roughly speaking, the most popular method, FGSM (fast gradient sign method) [2], and almost all other existing methods take the following three steps:

- Classify the target image with an image classifier

- Backpropagate all the way to the image to calculate a gradient

- Add noise to the image using the calculated gradient

Methods for crafting strong adversarial examples have been developed by exercising ingenuity in choices such as whether these steps are carried out once or repeated, how the loss function used in backpropagation is defined, and how the gradient is used to update the image. Most teams in the competition also seem to have used this kind of approach to build their attacks.
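The three steps can be made concrete with a single-step FGSM sketch. To keep it self-contained, a linear softmax classifier with hypothetical weights `W` and `b` stands in for the CNN, so the gradient can be written analytically instead of obtained by backpropagation through a deep network; a real attack would also clip the result to the valid pixel range.

```python
import numpy as np

def softmax(z):
    z = z - z.max()              # subtract the max for numerical stability
    e = np.exp(z)
    return e / e.sum()

def cross_entropy(x, label, W, b):
    return -np.log(softmax(W @ x + b)[label])

def fgsm(x, label, W, b, eps):
    # Step 1: classify the image (forward pass)
    probs = softmax(W @ x + b)
    # Step 2: gradient of the cross-entropy loss with respect to the image
    onehot = np.zeros_like(probs)
    onehot[label] = 1.0
    grad_x = W.T @ (probs - onehot)
    # Step 3: move every input value by eps in the direction that raises the loss
    return x + eps * np.sign(grad_x)

rng = np.random.default_rng(0)
W, b = rng.normal(size=(3, 8)), rng.normal(size=3)   # toy 3-class classifier
x = rng.normal(size=8)                               # toy 8-value "image"
x_adv = fgsm(x, label=1, W=W, b=b, eps=0.1)
print(cross_entropy(x, 1, W, b), cross_entropy(x_adv, 1, W, b))
```

Iterative variants repeat these steps with a smaller step size and project the noise back into the ε ball after each step.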

Our Method

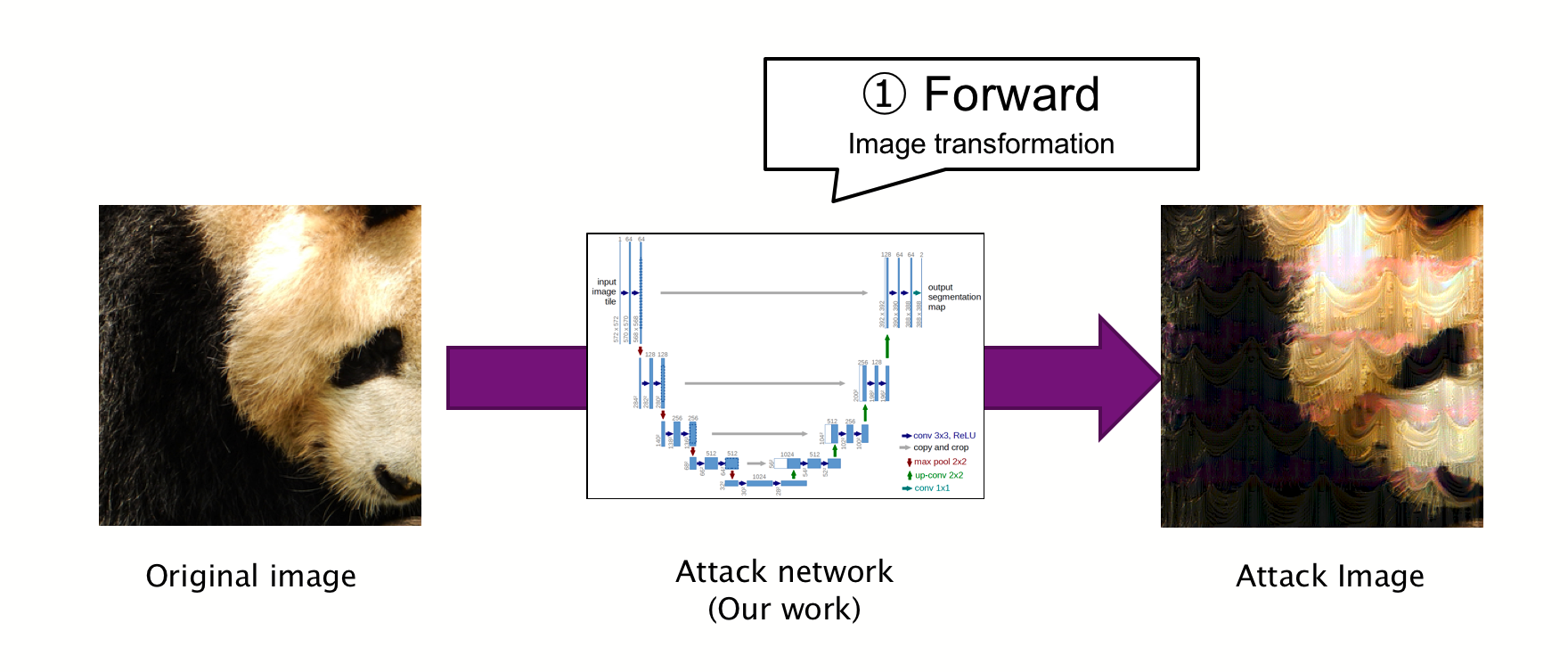

Our approach was to create a neural network that produces adversarial examples directly, which differs greatly from the mainstream approach described above.

The process of crafting an attack image is simple: all you need to do is give an image to the neural network. It then generates an output image, which is itself an adversarial example.

How We Trained the Attack Network

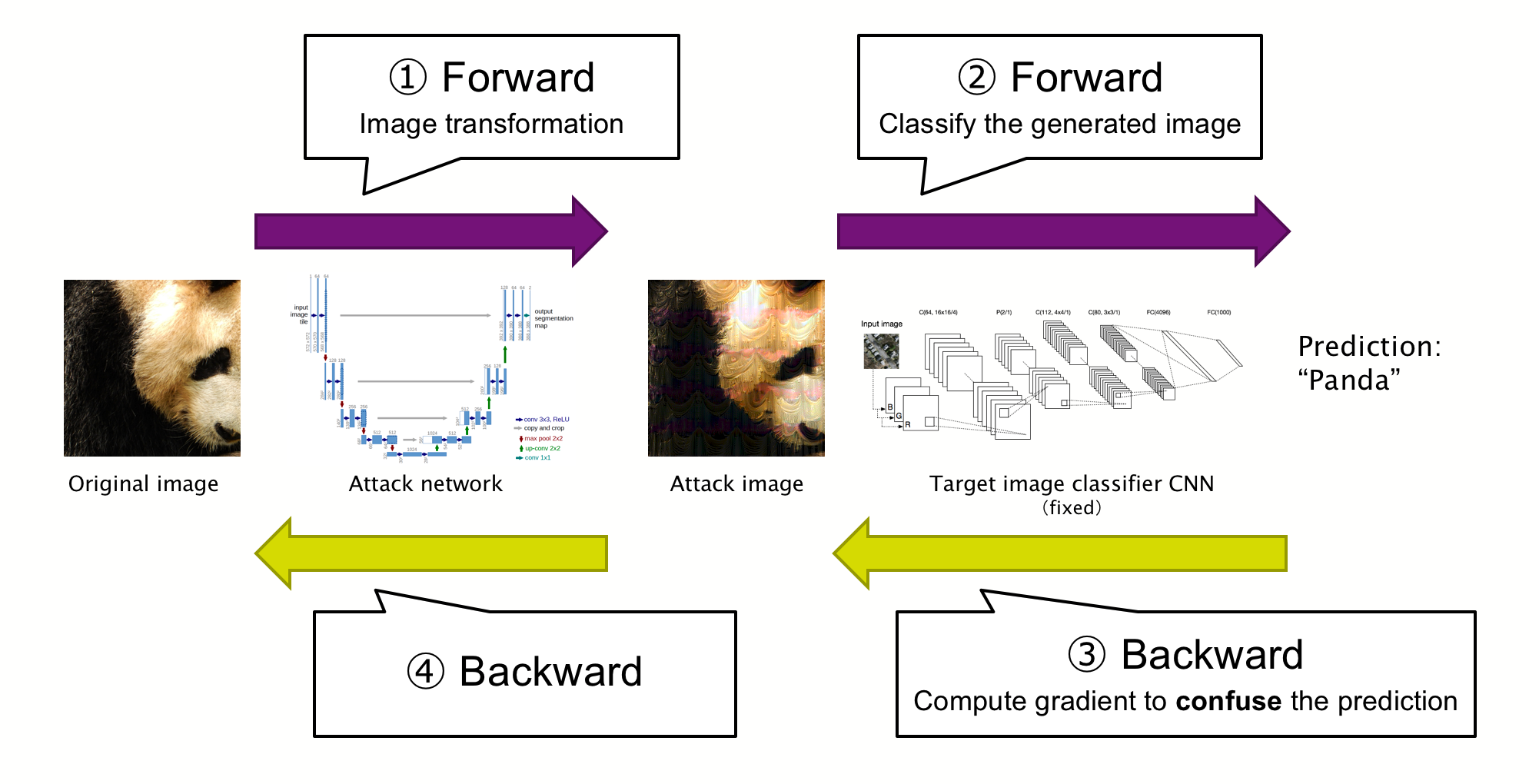

The essence of this approach was, of course, how we created the neural network. From here on, we call our neural network that generates adversarial examples the “attack network.” We trained the attack network by iterating the following steps:

- The attack network generates an adversarial example

- An existing trained CNN classifies the generated adversarial example

- Backpropagation through the CNN calculates the gradient with respect to the adversarial example

- Backpropagation continues through the attack network, which is updated using that gradient

We designed the architecture of the attack network to be fully convolutional. For reference, a similar approach has been proposed in the following paper [7].

- [7] Shumeet Baluja, Ian Fischer. Adversarial Transformation Networks: Learning to Generate Adversarial Examples. CoRR, abs/1703.09387, 2017.
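The training loop described above can be sketched in a few lines. This is a toy illustration under strong assumptions, not our actual implementation: a single dense layer with a tanh output (parameters `A` and `c`, both hypothetical) stands in for the fully convolutional attack network, a fixed linear softmax classifier (`W`, `b`) stands in for the trained CNN, and the gradients are written out by hand.

```python
import numpy as np

def softmax_rows(z):
    z = z - z.max(axis=1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)

def attack_forward(X, A, c, eps):
    """Attack network: a single forward pass yields the adversarial images."""
    T = np.tanh(X @ A.T + c)       # values in (-1, 1)
    return X + eps * T, T          # noise is bounded by eps per component

def classifier_loss(X_adv, Y, W, b):
    """Mean cross-entropy of the fixed classifier on the given inputs."""
    P = softmax_rows(X_adv @ W.T + b)
    return -np.log(P[np.arange(len(Y)), Y]).mean(), P

def train_attack_network(X, Y, W, b, eps, lr=0.1, steps=200):
    n, d = X.shape
    A, c = np.zeros((d, d)), np.zeros(d)
    for _ in range(steps):
        # 1. The attack network generates adversarial examples
        X_adv, T = attack_forward(X, A, c, eps)
        # 2. The fixed classifier classifies them
        _, P = classifier_loss(X_adv, Y, W, b)
        # 3. Backpropagate through the classifier to the adversarial examples
        G = P.copy()
        G[np.arange(n), Y] -= 1.0
        grad_x_adv = G @ W / n
        # 4. Backpropagate further into the attack network and update it
        #    (gradient ascent: the attack wants the classifier's loss to grow)
        grad_h = eps * (1.0 - T ** 2) * grad_x_adv
        A += lr * grad_h.T @ X
        c += lr * grad_h.sum(axis=0)
    return A, c

rng = np.random.default_rng(0)
X = rng.normal(size=(32, 8))                       # toy "images"
W, b = rng.normal(size=(3, 8)), rng.normal(size=3) # toy fixed classifier
Y = np.argmax(X @ W.T + b, axis=1)                 # labels it gets right
A, c = train_attack_network(X, Y, W, b, eps=0.1)
X_adv, _ = attack_forward(X, A, c, eps=0.1)
print(classifier_loss(X, Y, W, b)[0], classifier_loss(X_adv, Y, W, b)[0])
```

Once training is finished, attacking a new image only requires calling `attack_forward`, with no gradient computation at attack time.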

Techniques to Boost Attacks

To generate more powerful adversarial examples, we experimented repeatedly with the architecture of the attack network and the training method, and developed techniques such as multi-target training, multi-task training, and gradient hint. Please refer to our paper for details.

Distributed Training on 128 GPUs Combining Data and Model Parallelism

Training takes a significant amount of time, and we wanted to design a large-scale architecture for the attack network, so we used ChainerMN [8] to train it in a distributed manner on 128 GPUs. Two factors in particular shaped the setup: the batch size per GPU had to be reduced because of GPU memory limits, since the attack network is larger than the classifier CNN, and each worker uses a different classifier network in the multi-target training mentioned above. We therefore decided to combine standard data parallelism with ChainerMN's latest model-parallel functionality to parallelize training efficiently.

- [8] Takuya Akiba, Keisuke Fukuda, Shuji Suzuki: ChainerMN: Scalable Distributed Deep Learning Framework. CoRR abs/1710.11351 (2017)

Generated Images

In our approach, not only the method but also the generated adversarial examples are quite distinctive.

Original images are in the left column, generated adversarial examples are in the middle, and the generated noise is in the right column (i.e., the difference between the original image and the adversarial example). We can observe two distinguishing features from the above.

- Noise was generated to cancel the fine patterns such as the texture of the panda’s fur, making the image flat and featureless.

- Jigsaw puzzle-like patterns were added unevenly but effectively by making clever use of the original images.

Because of these two features, many image classifiers seemed to classify these adversarial examples as jigsaw puzzles. It is interesting to note that we did not specifically train the attack network to generate these puzzle-like images. We only trained it on objective functions that reward images that mislead image classifiers. Evidently, the attack network learned by itself that generating such jigsaw puzzle-like images was effective.

Results

In the end, we finished in fourth place among about 100 teams. Although I was personally disappointed by this result because we were aiming for first place, we had the honor of giving a talk at the NIPS’17 workshop, since only the top four teams were invited to do so.

At the invitation of the event organizers, we also co-authored a paper about the competition with big names in machine learning such as Ian Goodfellow and Samy Bengio. It was a good experience to publish a paper with such great researchers [9]. We have also made the source code available on GitHub [10].

- [9] Alexey Kurakin, Ian Goodfellow, Samy Bengio, Yinpeng Dong, Fangzhou Liao, Ming Liang, Tianyu Pang, Jun Zhu, Xiaolin Hu, Cihang Xie, Jianyu Wang, Zhishuai Zhang, Zhou Ren, Alan Yuille, Sangxia Huang, Yao Zhao, Yuzhe Zhao, Zhonglin Han, Junjiajia Long, Yerkebulan Berdibekov, Takuya Akiba, Seiya Tokui, Motoki Abe. Adversarial Attacks and Defences Competition. CoRR, abs/1804.00097, 2018.

- [10] pfnet-research/nips17-adversarial-attack: Submission to Kaggle NIPS’17 competition on adversarial examples (non-targeted adversarial attack track) : https://github.com/pfnet-research/nips17-adversarial-attack

Although our team was ranked fourth, we had been attracting attention from other participants even before the competition ended because our run time was very different in nature from that of other teams. This is a consequence of the completely different approach we took. The table below lists the top 15 teams with their scores and run times. As you can see, our team’s run time was an order of magnitude shorter. This is because our attack only performs a forward computation, whereas almost all the approaches taken by other teams repeat forward and backward computations to obtain gradients with respect to the image.

In fact, according to a PageRank-style analysis conducted by one of the participants, our team got the highest score. This indicates that our attack was especially effective against the top defense teams. It must have been difficult to defend against our attack, which was different in nature from other attacks. Incidentally, a paper describing the method used by the top team [11] has been accepted by the international computer vision conference CVPR’18 and is scheduled to be presented in the spotlight session.

- [11] Yinpeng Dong, Fangzhou Liao, Tianyu Pang, Xiaolin Hu, Jun Zhu: Discovering Adversarial Examples with Momentum. CoRR abs/1710.06081 (2017)

Conclusions

Our participation in the competition started as one of our company’s 20% projects. Once things got going, we began to think we should concentrate our efforts and aim for first place. After some coordination, our team got into full gear, and toward the end we were spending almost all of our work hours on this project. PFN has an atmosphere that encourages its members to take part in competitions like this one; other PFN teams have competed in the Amazon Picking Challenge and the IT Drug Discovery Contest, for example. I enjoy taking part in this kind of competition very much and will continue to do so regularly, while choosing competitions that relate to challenges our company wants to tackle. Quite often, I find that the skills honed through these competitions, such as tuning for accuracy or speed, are useful at critical moments in our company projects.

PFN is looking for engineers and researchers who are enthusiastic about working with us on this kind of activity.