Blog

Post-training completed for PLaMo-100B, a proprietary LLM with 100 billion parameters

Area

Tag

Kosuke Nakago

Engineer

[Note] This post was created by translating the Japanese post using PLaMo-100B-Instruct, followed by some minor human modification.

Preferred Elements (PFE), a subsidiary of Preferred Networks, has been working on the development of a Large Language Model (LLM) called “PLaMo-100B” with 100 billion parameters since February 2024. We completed the pre-training phase in May and have since been conducting post-training, which is the latter part of the training process. In this post, we will be reporting our efforts in the post-training phase.

PLaMo-100B-Instruct completed its post-training and achieved better performance than GPT-4 on the Japanese Jaster benchmark and also surpassed the performance of GPT-4 on the Rakuda Benchmark that tested Japanese-specific knowledge. For more information, please refer to the press release below.

The development of PLaMo-100B was supported by GENIAC, which aims to enhance Japan’s competitiveness in the development of generative AI foundation models. The development was carried out with the assistance of computational resources supported by the New Energy and Industrial Technology Development Organization (NEDO) under the “ポスト5G情報通信システム基盤強化研究開発事業/ポスト5G情報通信システムの開発”.

What is post-training?

The development of large language models (LLM) involves a process of post-training after pre-training, which aims to equip the models with the capabilities learned through massive amounts of text during the initial stage. Pre-training involves making a model learn the rules of grammar and the world’s knowledge by predicting the next token in text sequences. However, a model that has been pre-trained only cannot efficiently extract the learned knowledge in a way that corresponds to human intentions. Post-training solves this problem by teaching a model how to utilize the skills acquired during pre-training in a manner that can better be applied to real-world applications.

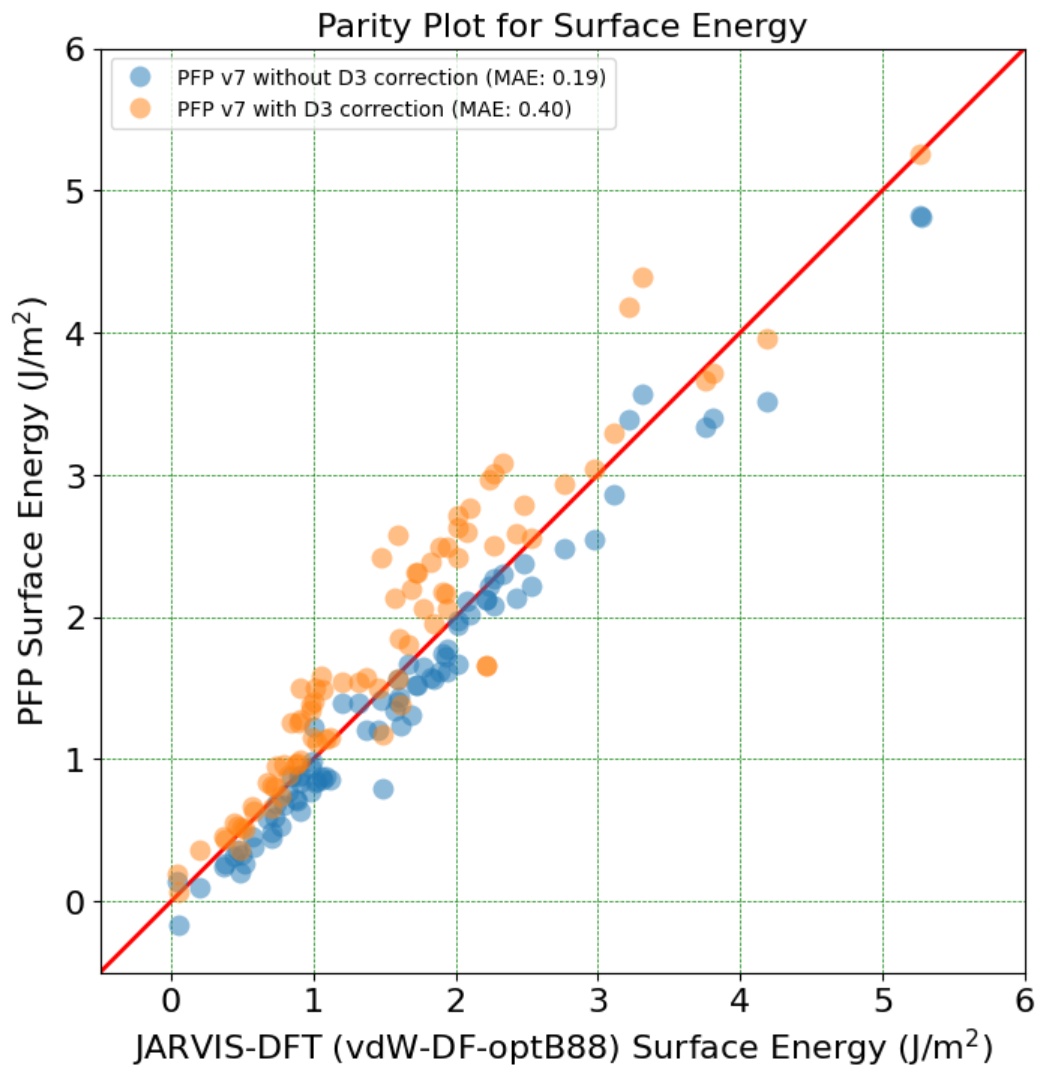

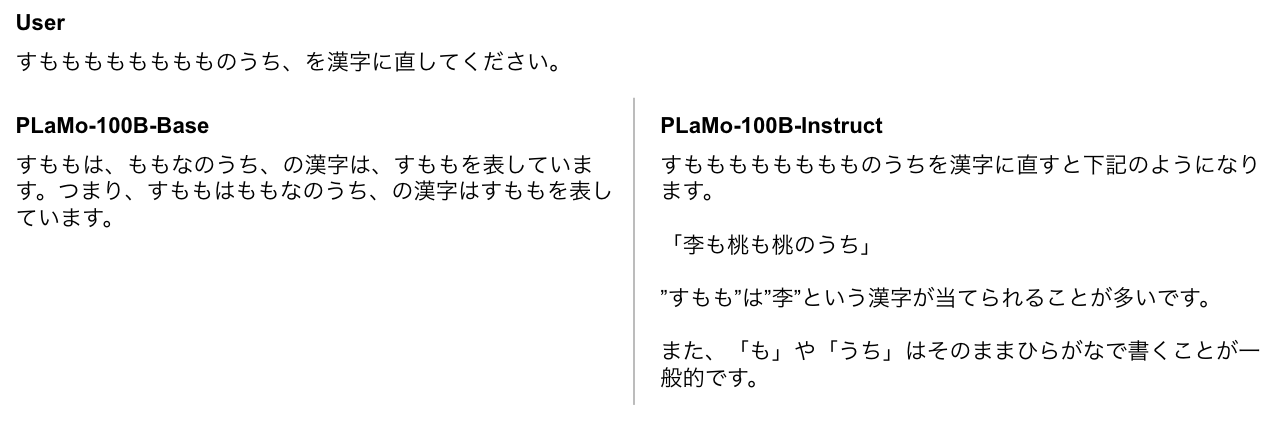

The PLaMo-100B-Base, which has not gone through post-training, contributes no proper response in response to the user’s query on how kanji characters would represent famous tongue-twisting phrases, whereas PLaMo-100B-Instruct, which has undergone the additional post-training, could receive and execute the request from users to provide a correct answer. See Figure 1.

Figure 1: Comparing outputs before and after post-training

Figure 1: Comparing outputs before and after post-training

Pre-training can be likened to the process of creating a rough gemstone, whereas post-training is analogous to refining and polishing it to make it more useful for specific purposes. The rough gemstone may not shine on its own, but through the process of post-training, it can be transformed into a valuable and practical tool.

We have conducted post-training of PLaMo-100B, developed entirely internally at PFE. We were able to gain expertise on the type of data that can be applied and how to apply it during post-training. We believe that having such control over the modeling process, learning process, and data is crucial for incorporating LLMs into various applications.

Training method

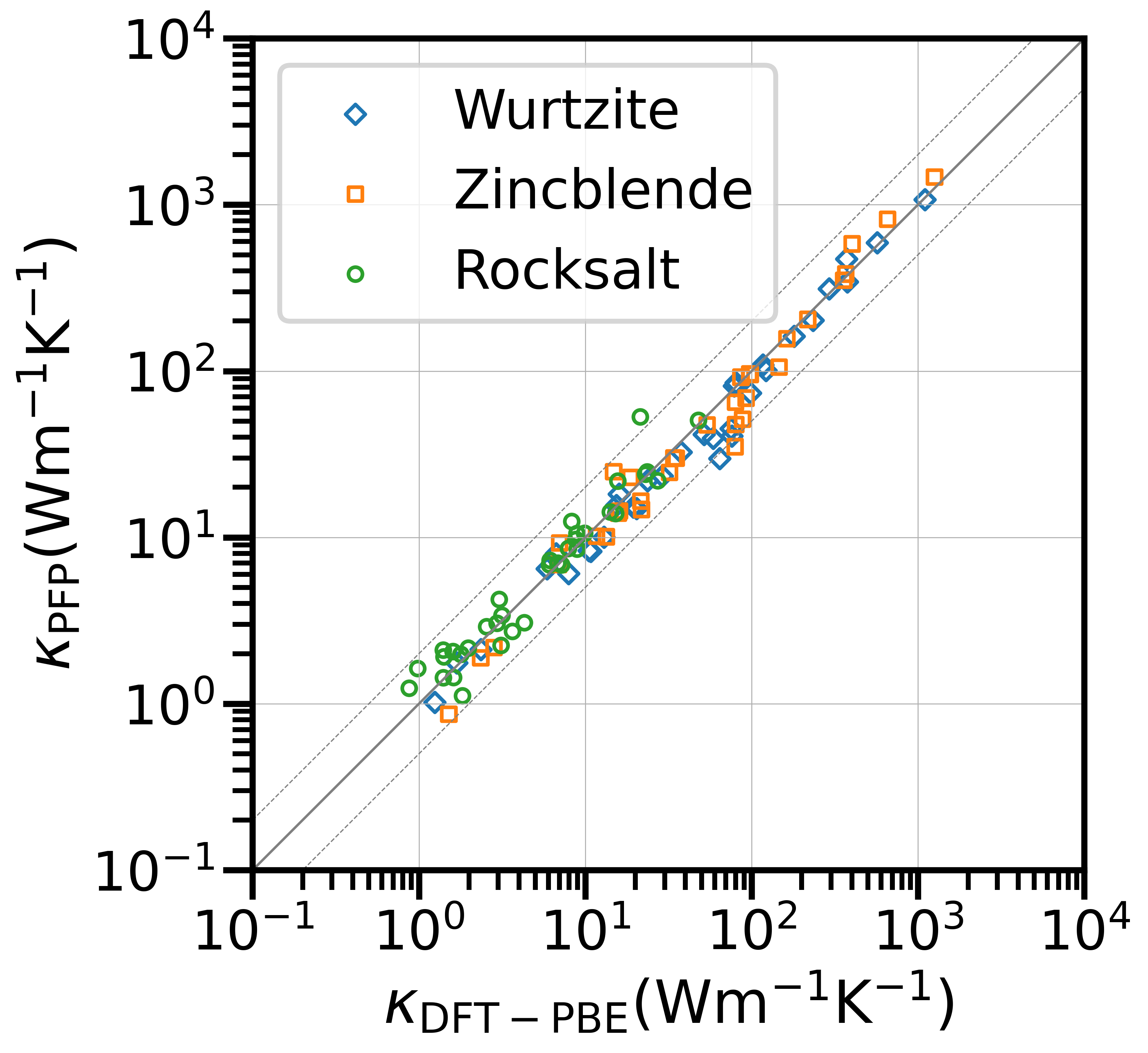

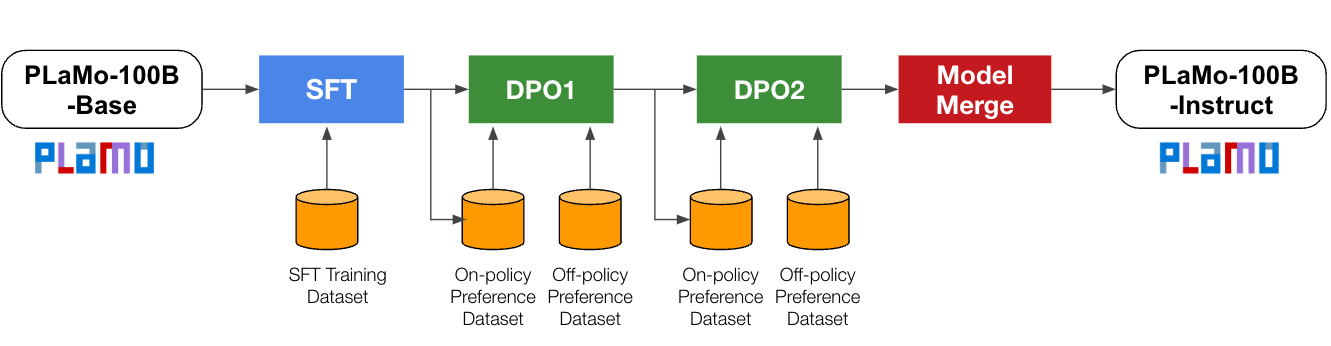

In this post-training, we introduce the Supervised Finetuning (SFT) and Direct Preference Optimization (DPO) algorithms. As described below, we utilized a learning pipeline of SFT -> Iterative DPO. We also adopted the Model Merging technique for further improvement in generalization performance after the learning process. A summary of the training pipeline is displayed in Figure 2.

Figure 2: Summary diagram of the training pipeline

Supervised Finetuning (SFT)

In Supervised Finetuning (SFT), the teacher-driven training is performed using a dataset consisting of the paired data of “question to the LLM” and “expected answer from the LLM.” In other words, this dataset contains a variety of tasks, where each pair is always formed with a question and the corresponding answer to the question as a role model as in a task of answering a mathematical problem—the pair includes the problem and its answer, or, like conversational chat, prompt by the user and the expected response. By using as the training dataset, as much diverse and high-quality data as possible, we hope for improvement of the downstream-tasks performance of the LLM.

Training in SFT is conducted using Next Token Prediction, similar to pre-training, but with a focus on fine-tuning the generation of the response portion. In order to achieve this, a technique may be employed where only the response portion of the input text is considered when calculating the loss, rather than the entire input. Although experimental results showed no significant performance difference between this approach and considering the entire input, it was decided to adopt this method in the current training to mitigate the risk of the model learning undesirable characteristics, such as aggressive expressions, that may be present in the questions.

In SFT, traditionally, various task training data is aggregated together and trained at once. However, some existing, previous studies, like Nemotron-4, have observed a conflict among multiple tasks when learning concurrently. Although they tried to alleviate the conflict with adjustments of the sample weighting ratio, achieving a certain level of harmony was difficult. In particular, such a conflict seems worse when handling the Coding tasks. As a solution, a two-stage SFT is proposed to divide it into the original specific tasks such as Coding and more general tasks, then conduct separate SFT for both.

In our experiment, a similar tendency occurred for mathematical questions as well, so we adopted their previous method of a two-stage SFT that separates mathematical questions (first-stage) and various other tasks (second-stage).

Direct Preference Optimization (DPO)

DPO is an algorithm proposed by Rafailov et. al (2024). It learns human preference from labeled data where a pair of responses to the same question is labeled as better (chosen) or worse (rejected). The model is encouraged to generate a more preferred response by using this preference information.

In learning DPO, existing datasets such as Anthropic/hh-rlhf and nvidia/HelpSteer2, which are labeled with responses from LLMs or human-written responses, are often used. However, it is also possible to generate data by having the model itself generate multiple response candidates and then labeling them. The former setting, where the model that generated the responses is different from the one being trained, is called off-policy, while the latter, where the model being trained generates the responses, is called on-policy.

On-policy training has been shown to be effective in improving learning efficiency, as it provides preference feedback on the types of responses that the model is more likely to generate (Tajwar et al., 2024). A middle ground approach, SPIN, has also been proposed, which generates a dataset by pairing the teacher responses in the SFT dataset with the model-generated responses, assuming that the teacher responses are more preferred.

When on-policy data is used for training, it is possible to iterate between data generation and DPO training (Iterative DPO), which has been found to be more effective than a single round of DPO (Xu et al., 2023, Dong et al., 2024).

We combine three different datasets for our two-stage DPO training after SFT: (1) a publicly available dataset, (2) a dataset generated by labeling the responses generated from a snapshot of PLaMo-100B, and (3) a dataset generated by SPIN, in order to take advantage of both the high-quality preference datasets that are publicly available and the benefits of Iterative DPO using on-policy datasets. The details of the data generation process are described in the data generation section.

Model Merge

Model merging is a technique that combines multiple models to improve performance. There are various methods for model merging. Llama-3.1 have reportedly used the average of multiple models. We used a simple model merging technique called SLERP, which calculates the midpoint between two models.

There were several final candidates for DPO results, depending on the combination of training data and other factors. By merging two of these models with distinct characteristics, we were able to create a model that incorporates the strengths of both models to some extent.

Data generation

In order to perform post-training, it is necessary to have a diverse and high-quality dataset that indicates what kind of responses are desirable for user questions. In the early days of post-training, studies like InstructGPT hired many annotators to create a dataset for defining how LLMs should respond to user questions. However, as LLM development has progressed, there have been efforts to have LLMs themselves construct post-training datasets, such as Constitutional AI and Self-Instruct.

Since manually creating datasets can be costly and may not provide a competitive advantage, and because there is a need for a technology infrastructure that can flexibly respond to changing post-training goals for each project or application, we decided to focus on developing a scalable data generation method in February, when the GENIAC project began.

In practice, we tried all of the following methods for post-training:

- Using public data

- Generating data programmatically

- Generating data using LLMs

In this project, we did not use any data that prohibits commercial use or output from proprietary models like GPT-4 or GPT-3.5, and also did not use the Jaster training data, as this is prohibited by the GENIAC requirements.

Using public data

There are high-quality post-training datasets available for commercial use, such as oasst2 or hh-rlhf in English, also ichikara-instruction (requires payment for commercial use) in Japanese. Also, the number of publicly accessible datasets is increasing day-by-day. During this project, we kept an eye on datasets made available during the project period and conducted experiments on various datasets.

Generating data programmatically

To solve mathematical problems accurately, we created templates for problem types that involve calculations and generated datasets by changing the numerical values. Our math dataset was generated manually without using machine learning. Although there is a limit to the number of problem templates that can be created by hand, and many data points would have only different numerical values, we decided it would be okay based on our previous learning experiments and the following considerations. When an LLM generates a calculation result, the distribution of tokens tends to become deterministic, which is as intended during the learning process. Additionally, the text other than the formulas themselves is likely to be in a fixed format. Even if the only difference between data points is the numerical values, there is some diversity in the results, such as whether carrying occurs in addition, whether the result of a fraction calculation can be simplified, or which variable to eliminate in simultaneous linear equations.

Existing examples of datasets not using machine learning include the AMPS pretraining corpus by Hendrycks et al., 2021 and the work by Saxton et al., 2019, but these datasets have artificial TeX expressions for formulas and answers are only numerical values, so there is room for improvement for post-training. We also generated our own math datasets for the purpose of increasing the amount of Japanese math data. We have used the math-related datasets for pre-training as well, but for post-training, we apply a different format, such as instruction-based responses, and combine different datasets with varying ratios, taking into account the characteristics of each dataset.

Generating data using LLMs

For the question-answering dataset, we generated it based on the Self-Instruct algorithm, but instead of directly using the algorithm for GPT, we devised a method to enable the generation of such data with smaller LLMs like PLaMo-13B. For instance, when attempting to generate a question sentence directly, it did not work well, so we added an extra step to first generate a short title.

During the development of PLaMo-100B, we also worked on translating the collected and generated datasets into Japanese. There are very limited options for post-training datasets available for commercial use in Japanese. It is difficult to obtain a sufficient amount and variety of data for training. Even when generating our own data, many open LLMs are developed with English in mind, making it challenging to generate high-quality Japanese responses compared to English. By using PLaMo-100B for translation during post-training, we were able to increase the amount of high-quality Japanese data, which led to performance improvements in Japanese generation tasks, such as Japanese MT-Bench.

PLaMo-100B snapshot for generating Preference data

We generated preference data during the post-training process of PLaMo-100B. Referring to the RLHF Workflow, we generated eight different responses for the same prompt using PLaMo-100B and evaluated their scores. The highest-scoring response was chosen as the chosen response, while the lowest-scoring one was rejected. We used open LLMs for scoring the responses and experimented with both LLM as a Judge format and Reward model format for scoring.

In this data generation process, only the prompt is required, and there is no need for a teacher response example. We can utilize datasets like chatbot_arena_conversations, which only contains user prompts in a commercially usable format. During the response generation stage, where LLM inference is required, we utilized vLLM for acceleration.

Evaluation Method and Evaluation Results

For evaluating the model, we used the g-leaderboard branch of the llm-leaderboard benchmark operated by Weights & Biases on the GENIAC 1.0, and measured Jaster, MMLU, and MT-Bench. Additionally, we also used in-house evaluation code to measure the Rakuda Benchmark.

Jaster

It measures the ability of LLMs to understand Japanese and is evaluated using the code from the llm-jp-eval repository. In the GENIAC project, it is evaluated on the g-leaderboard branch using a specific set of categories (NLI, QA, RC, MC, MR, FA, six categories in total). For more information about the meaning of each category and sample questions, please refer to the Nejumi LLM leaderboard Neo.

In this benchmark, the performance of LLMs is measured in both 4-shot and 0-shot settings during the question-answering task. In the 4-shot setting, examples of questions and answers are provided when asking questions, while in the 0-shot setting, no examples are given.

| Model Name | AVG | FA | MC | MR | NLI | QA | RC |

| GPT-4 (0125 Preview) | 0.7222 | 0.2546 | 0.96 | 0.97 | 0.772 | 0.5685 | 0.8084 |

| GPT 3.5 Turbo | 0.5668 | 0.1828 | 0.61 | 0.77 | 0.59 | 0.4294 | 0.8183 |

| Swallow-70b-instruct-hf | 0.5755 | 0.1752 | 0.59 | 0.71 | 0.642 | 0.48 | 0.8559 |

| PLaMo-100B-Base | 0.5415 | 0.1851 | 0.83 | 0.28 | 0.682 | 0.4256 | 0.8463 |

| PLaMo-100B-Instruct | 0.7378 | 0.5791 | 0.95 | 0.78 | 0.838 | 0.3939 | 0.8858 |

Table 1: Evaluation Results for Jaster 0-shot

Note: PLaMo-100B-Instruct evaluations were conducted in-house, while other models’ evaluations were based on the results provided by Weights & Biases.

| Model Name | AVG | FA | MC | MR | NLI | QA | RC |

| GPT-4 (0125 Preview) | 0.7724 | 0.4052 | 0.95 | 0.98 | 0.806 | 0.6225 | 0.8707 |

| GPT 3.5 Turbo | 0.6564 | 0.355 | 0.9 | 0.84 | 0.544 | 0.4226 | 0.8765 |

| Swallow-70b-instruct-hf | 0.6755 | 0.3653 | 0.9 | 0.77 | 0.506 | 0.6339 | 0.8779 |

| PLaMo-100B-Base | 0.6786 | 0.3045 | 0.93 | 0.61 | 0.71 | 0.6335 | 0.8839 |

| PLaMo-100B-Instruct | 0.7750 | 0.5917 | 0.96 | 0.80 | 0.856 | 0.5610 | 0.8812 |

Table 2: Evaluation Results for Jaster 4-shot

Note: PLaMo-100B-Instruct evaluations were conducted in-house, while other models’ evaluations were based on the results provided by Weights & Biases.

As shown in Tables 1 and 2, the PLaMo-100B-Instruct model, which is obtained after post-training, significantly improved its performance compared to the base model, surpassing GPT-4’s average score. Although the Jaster training dataset was not used in this experiment, the FLAN paper and our recent technical blog post “Evaluation of PFE’s Developed LLM, PLaMo-100B, on Financial Benchmarks and Analysis of Results” explain that the model learned how to utilize the knowledge gained during pre-training by learning how to answer various question formats during post-training. This makes the model to perform better. The results confirm that the PLaMo-100B model, which is trained on a higher fraction of Japanese data, has a strong foundation in Japanese language understanding.

The only category where the model’s performance lagged behind GPT-4 was the MR (Mathematical Reasoning) category. We constructed a large-scale math dataset as explained in previous section and performed extensive SFT. However, achieving near-perfect accuracy in this category requires a high level of generalization in mathematical problem-solving abilities, which might not be fully developed during pre-training without sufficient exposure to a wide variety of mathematical examples.

MT-Bench

MT-Bench is a benchmark for evaluating the conversational response ability of LLMs, which measures the quality of responses for questions in eight categories: Writing, Roleplay, Extraction, Reasoning, Math, Coding, STEM, and Humanities. Since the responses are free-form conversations, it is not possible to score them using rule-based methods. Instead, the LLM-as-a-Judge method is used, where LLMs like GPT-4 are used as evaluators to assign scores. The original benchmark is in English, but Stability AI has created a Japanese version, which is publicly available as Japanese MT-Bench.

In the GENIAC project, we have measured both English and Japanese versions of MT-Bench scores. However we report only Japanese MT-Bench scores on Table 3.

| Model Name | AVG | coding | extraction | humanities | math | reasoning | roleplay | stem | writing |

| GPT-4 (0125 Preview) | 8.925 | 9.1 | 8.5 | 8.55 | 8.65 | 8.25 | 9 | 9.8 | 9.55 |

| GPT 3.5 Turbo | 8 | 8.4 | 8.65 | 9.75 | 5.15 | 6.35 | 8.75 | 8.5 | 8.45 |

| Mixtral-8x7B-Instruct-v0.1 | 6.975 | 6.65 | 8.15 | 8.5 | 5 | 6.05 | 6.4 | 8.4 | 6.65 |

| Swallow-70b-instruct-hf | 4.381 | 3.35 | 5.7 | 4.8 | 1.45 | 3.75 | 5.85 | 5.85 | 4.3 |

| PLaMo-100B-Base | 5.469 | 3.85 | 4.65 | 7.6 | 3.95 | 2.55 | 8 | 7.7 | 5.45 |

| PLaMo-100B-Instruct | 7.781 | 4.95 | 7.7 | 9.75 | 4.9 | 7.35 | 9.25 | 9.1 | 9.25 |

Table 3: Evaluation Results for Japanese MT-Bench

Note: PLaMo-100B-Instruct evaluations were conducted in-house, while other models’ evaluations were based on the results provided by Weights & Biases.

Based on the evaluation, PLaMo-100B-Instruct managed to score an average of 7.781, coming close to GPT-3.5 in terms of score. Compared to the baseline model’s score of 5.469, there is a significant improvement, demonstrating that the post-training process successfully enhanced the conversational response capabilities as intended.

Analyzing by category, we found that the model particularly excelled in the humanities, STEM, writing, and roleplay categories, with notable performance in responses requiring creative and open-ended dialogue. Table 4 illustrates an example response from the humanities category.

| Turn | User | PLaMo-100B-Instruct | Score |

| 1 |

日本でビジネスを行う際のビジネスマナーについて説明してください。 |

日本でビジネスを行う際には、日本独自のビジネスマナーが存在します。以下に、その一部をご紹介します。 ・名刺交換 日本では、名刺交換は非常に重要なビジネスマナーの一つです。名刺交換の際には、名刺を両手で受け取り、相手の目を見て挨拶を交わします。また、名刺を受け取る際には「頂戴いたします」と言い、相手の名刺を丁寧に扱います。 ・挨拶 日本では、挨拶を非常に重視します。ビジネスの場では、「おはようございます」「こんにちは」「こんばんは」などの挨拶を、相手に対して丁寧に行うことが求められます。また、挨拶の際にはお辞儀をすることが一般的です。お辞儀の角度やタイミングにもマナーがありますので、注意が必要です。 ・時間厳守 日本では、時間を守ることが非常に重要視されます。ビジネスの場では、約束の時間に遅れないようにすることが求められます。やむを得ず遅刻をする場合には、事前に連絡を入れることがマナーです。 ・言葉遣い 日本では、敬語を使うことが一般的です。相手に対して失礼のないように、適切な敬語を使うことが求められます。また、ビジネスの場では、ネガティブな言葉や否定的な表現を避けることが望ましいとされています。 ・服装 日本では、ビジネスの場での服装にもマナーがあります。男性の場合はスーツが一般的で、女性の場合はスーツやジャケット、ブラウスなどのフォーマルな服装が求められます。また、ビジネスの場では、清潔感のある服装を心がけることが大切です。 以上のように、日本でビジネスを行う際には、日本独自のビジネスマナーを理解し、適切に対応することが求められます。これらのマナーを守ることで、相手に対して敬意を表し、ビジネスを円滑に進めることができます。 |

10 |

| 2 |

日本のカーディテーリング事業の新入社員向けトレーニングのビデオスクリプトを作成してください。上記のエチケットの規範を強調してください。 |

[オープニングシーン: 清潔でプロフェッショナルな外観のカーディテイリングショップ。トレーナーがカメラに向かって微笑みながら挨拶をする。] トレーナー: 「こんにちは、そしてようこそ私たちのカーディテイリングビジネスへ!私は本日のトレーナーです。今日から数日間、皆さんに基本的なビジネスマナーやエチケットについての研修を行います。これらのスキルは、お客様や同僚との良好な関係を築くために非常に重要です。では、さっそく始めましょう。」 [シーン1: 名刺交換] トレーナー: 「日本では、名刺交換は非常に重要なビジネスマナーです。名刺を交換する際には、必ず立って、相手の目を見て、両手で名刺を受け取り、渡します。また、名刺を受け取る際には、『名刺を頂戴いたします』と言い、相手の名刺を丁寧に扱います。」 [シーン2: 挨拶] トレーナー: 「挨拶も非常に重要です。お客様や同僚に対して、『おはようございます』、『こんにちは』、『こんばんは』などの挨拶を、適切なタイミングで行うようにしましょう。また、挨拶をする際には、お辞儀をすることが一般的です。お辞儀の角度やタイミングにも気を配りましょう。」 [シーン3: 時間厳守] トレーナー: 「時間を守ることも非常に重要です。お客様との約束や、チームミーティングなどには、必ず時間通りに、あるいは少し早めに到着するようにしましょう。もし、遅刻をしてしまう場合には、事前に連絡を入れるようにしましょう。」 [シーン4: 言葉遣い] トレーナー: 「言葉遣いは、相手に対する敬意を表すために非常に重要です。お客様や同僚に対しては、敬語を使うようにしましょう。また、ネガティブな言葉や否定的な表現は避けるようにしましょう。」 [シーン5: 服装] トレーナー: 「服装も、相手に対する敬意を表すために重要です。男性の場合は、スーツやジャケットを着用することが一般的です。女性の場合は、スーツやジャケット、ブラウスなどのフォーマルな服装が求められます。また、清潔感のある服装を心がけるようにしましょう。」 [クロージングシーン: 要点の振り返りと励まし] トレーナー: 「以上が、今日の研修の主なポイントです。これらのマナーやエチケットを守ることで、お客様や同僚との良好な関係を築くことができます。最初は難しいかもしれませんが、練習を重ねることで、自然と身についていきます。皆さんの活躍を期待しています!」 [エンドシーン: 会社のロゴと連絡先情報が表示される。] |

10 |

Table 4: Example of a question and response from the humanities category in Japanese MT-Bench, along with the corresponding score for PLaMo-100B-Instruct

On the other hand, in categories that require scientific consideration such as math, coding, and reasoning, the pre-trained model’s score was found to be low. As observed in the Jaster benchmark, improving the model’s ability in these categories would likely be crucial during the pre-training phase. However, in this study, we focused on how much the model’s scientific capabilities could be enhanced through post-training.

As previously mentioned, we developed a scalable data generation method to create a large number of high-quality math and coding datasets for post-training. After training on these datasets, the model’s performance in the math, coding, and reasoning categories significantly improved, although it still lagged behind GPT-4. Nonetheless, the model’s ability was comparable to GPT-3.5 in these categories after the post-training process.

Note that in this post-training, the user prompt from the chatbot_arena_conversations dataset was used. It was found that eight English MT-Bench prompts were included (leaked) in the dataset. Reference answers and Japanese MT-Bench prompts were not included. Since the leak was discovered after the post-training was completed, the dataset containing prompts generated from these prompts was not removed for this study.

Rakuda Benchmark

Rakuda Benchmark is a benchmark that evaluates the performance of conversation responses for questions related to Japanese domestic topics of geography, politics, history, and society. We used the judge prompts from MT-Bench to perform an absolute evaluation with a score of 10, and also conducted a relative evaluation using the prompts provided by the Rakuda Benchmark official, but did not calculate the rating from pairwise comparisons of many models as official evaluations do.

| Model Name | AVG | Geography | Politics | History | Society |

| PLaMo-100B-Instruct | 9.725 | 9.7 | 9.6 | 9.8 | 9.8 |

| GPT-4-0125-Preview | 9.55 | 9.6 | 9.75 | 9.5 | 9.35 |

| GPT-4-0613 | 9.375 | 9.4 | 9.6 | 9.5 | 9 |

| GPT-3.5-Turbo-0301 | 8.88 | 8.5 | 9.1 | 9 | 8.9 |

Table 5: Absolute Evaluation Results for Rakuda Benchmark

| PLaMo-100B-Instruct vs. GPT-4-0125-Preview | 42 wins, 36 losses, 2 ties |

| PLaMo-100B-Instruct vs. GPT-4-0613 | 58 wins, 21 losses, 1 tie |

Table 6: Pairwise Evaluation Results for Rakuda Benchmark. It is known that the pairwise evaluation may be biased by the order of presentation, so we evaluated 40 questions * 2 orders of presentation.

The perfect score for the absolute evaluation is 10 points, and in fact, the generated results from PLaMo-100B-Instruct did not contain any errors that the judge model could detect. The main difference in scores was due to the evaluation of the details of the answers. In the geographical field, knowledge was particularly important, and it seemed that the amount of Japanese data used during pre-training contributed to the accuracy of the knowledge. On the other hand, for the other three fields, the judge model often focused on critical aspects such as “perspective”, “impact”, and “challenges” which led to difficulties in achieving high relative evaluation scores compared to GPT-4-0125-Preview.

Here are some notes:

- The response generation for PLaMo and absolute evaluation were performed using in-house implementations for faster inference, without changing the generation parameters.

- The judge model was specified as gpt-4, and the evaluation was conducted using the gpt-4-0613 version of Azure OpenAI, which was the latest version available at the time of the final update of the Rakuda Benchmark official evaluation.

- The response data for GPT-4 0125-Preview was generated using the code provided in the Rakuda Benchmark repository. The GPT-4-0613 and GPT-3.5-Turbo-0301 responses were obtained from the published response data in the Rakuda Benchmark repository, with the gpt-4 version estimated from the date (20230713) included in the file names.

Conclusion

With the computational resources provided by GENIAC, we conducted pre-training and post-training for PLaMo-100B. We gained experience in training a 100B-scale LLM from scratch through this project. After completing the post-training, PLaMo-100B-Instruct demonstrated notable performance surpassing GPT-4 on Japanese-specific benchmarks, Jaster and Rakuda Benchmark.

However, this effort also revealed areas where there is still room for improvement (such as math and coding) compared to the state-of-the-art models. We utilize these insights in future model development.

In this post-training, we focused on creating a framework for dataset generation and training pipelines that are independent of specific models. It enables us to apply post-training to various models beyond PLaMo in the future. We also plan to accelerate applications in different industries by accumulating post-training know-how and preparing datasets.

Although we did not delve into the specifics in this blog, we are also actively considering the safety aspects of LLMs. For PFN’s stance on responsible technology development, please refer to “Responsibility/責任ある技術開発に向けて“.

Plamo beta free trial

We are now accepting applications for a trial API based on the PLaMo-100B-Instruct model starting from August 7. This is an opportunity for those who would like to experience PLaMo’s capabilities, those who wish to utilize it in their applications, and researchers engaged in R&D using large language models.

For more information, please refer to the press release.

Area

Tag