2018.10.15

New HCI group + upcoming papers and demos at UIST and ISS 2018

Fabrice Matulic

Researcher

Creation of HCI group

At PFN, we aspire to create next-generation “intelligent” systems and services powered by cutting-edge AI technology. We also recognise that humans will remain essential actors in the design and use of such systems, so it is paramount to think about how the dialogue between people and machines takes place. Human-Computer Interaction (HCI) approaches, which focus on bridging the gap between people and machines, can contribute considerably to improving intricate machine-learning processes that require human intervention. With the creation of a dedicated HCI group at PFN, we aim to advance user-centred design for AI and machines and to make sure that the “humans in the loop” are supported by powerful tools when working with such systems.

Broadly, there are three main lines of research that the team would like to pursue:

- HCI for machine learning: Use HCI methods to facilitate complex or tedious machine-learning processes in which people are involved (such as data gathering, labelling, pre-processing and augmentation, as well as neural network engineering, deployment and management)

- Machine learning for HCI: Use deep learning to enhance existing interaction techniques or enable new ones (e.g. advanced gesture recognition, activity recognition, multimodal input, sensor fusion, embodied interaction, collaboration between AI, robots and humans, generative models for creating interactive content, etc.)

- Human-Robot Interaction (HRI): Make communication and interaction between smart robots and their users natural, intuitive and hopefully even fun!

Of course, HCI does not necessarily involve machine learning or robots and we are also generally interested in creating novel and exciting interactive experiences.

The HCI group will benefit from the expertise of Prof. Takeo Igarashi of The University of Tokyo, who has been hired as an external consultant. In addition to his extensive experience in HCI and HRI, Prof. Igarashi has recently started a JST CREST project on “HCI for machine learning” at his lab, which aligns closely with our research interests. We look forward to a long and fruitful collaboration.

Papers and demos at UIST and ISS 2018

Although the group has only just been officially created, we have already been active in HCI research for the past few months, and we will present two papers on recent work: one at UIST this week and one at ISS next month.

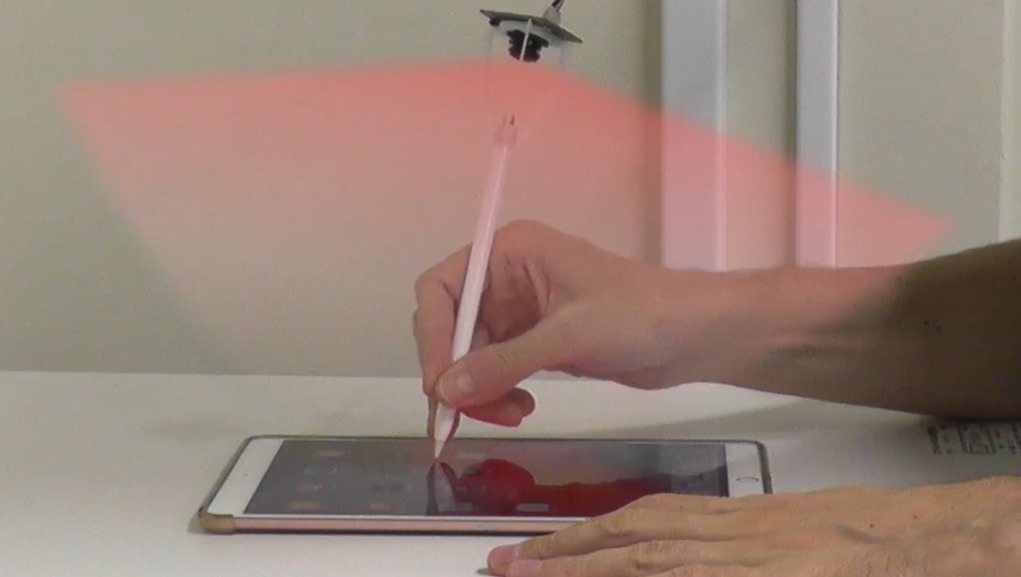

The first project, which was started at the University of Waterloo with Drini Cami and Prof. Dan Vogel, proposes using different ways of holding a stylus while writing on a tablet to trigger different UI actions. The technique applies machine learning to the raw touch input data to detect these pen grips when the user’s hand contacts the surface. Its advantage is that it lets users switch rapidly between pen modes with the same hand that writes, without resorting to cumbersome UI widgets.

In addition to the paper presentation, Drini will also be showing the technique at UIST’s popular demo session.

Our second contribution is the interactive projection mapping system for PaintsChainer that we showed at the Winter Comiket last year. For those of you who missed it, ColourAIze (as we call it in the paper) works directly with drawings and art on paper. Specifically, it projects the colour fills determined by PaintsChainer directly onto the paper drawing, so that the colouring is superimposed on the line art. As with the web version of PaintsChainer, users can specify local colour hints with simple (digital) pen strokes to influence the colourisation.

As with the pen-posture project above, we will both present our paper and demo the system at the conference. If you’d like to try the fun experience of having your paper sketches, drawings and manga coloured by AI, come and see us at ISS in Tokyo in November!

Last but not least, we are looking for talented HCI researchers to join our team, so if you think you can contribute in the areas mentioned above, please check the details of the position on our jobs page and apply!