2017.09.04

Deep Reinforcement Learning Bootcamp: Event Report

Shohei Hido

VP of Research and Development

Preferred Networks proudly sponsored an exciting two-day event, the Deep Reinforcement Learning Bootcamp, held on August 26-27 at UC Berkeley.

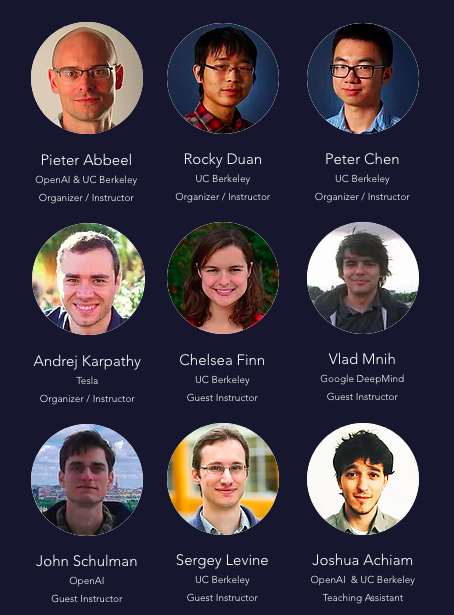

The instructors included well-known researchers in the field, such as Vlad Mnih (DeepMind, creator of DQN), Pieter Abbeel (OpenAI/UC Berkeley), Sergey Levine (Google Brain/UC Berkeley), Andrej Karpathy (Tesla, head of AI), and John Schulman (OpenAI), as well as up-and-coming researchers such as Chelsea Finn, Rocky Duan, and Peter Chen (UC Berkeley).

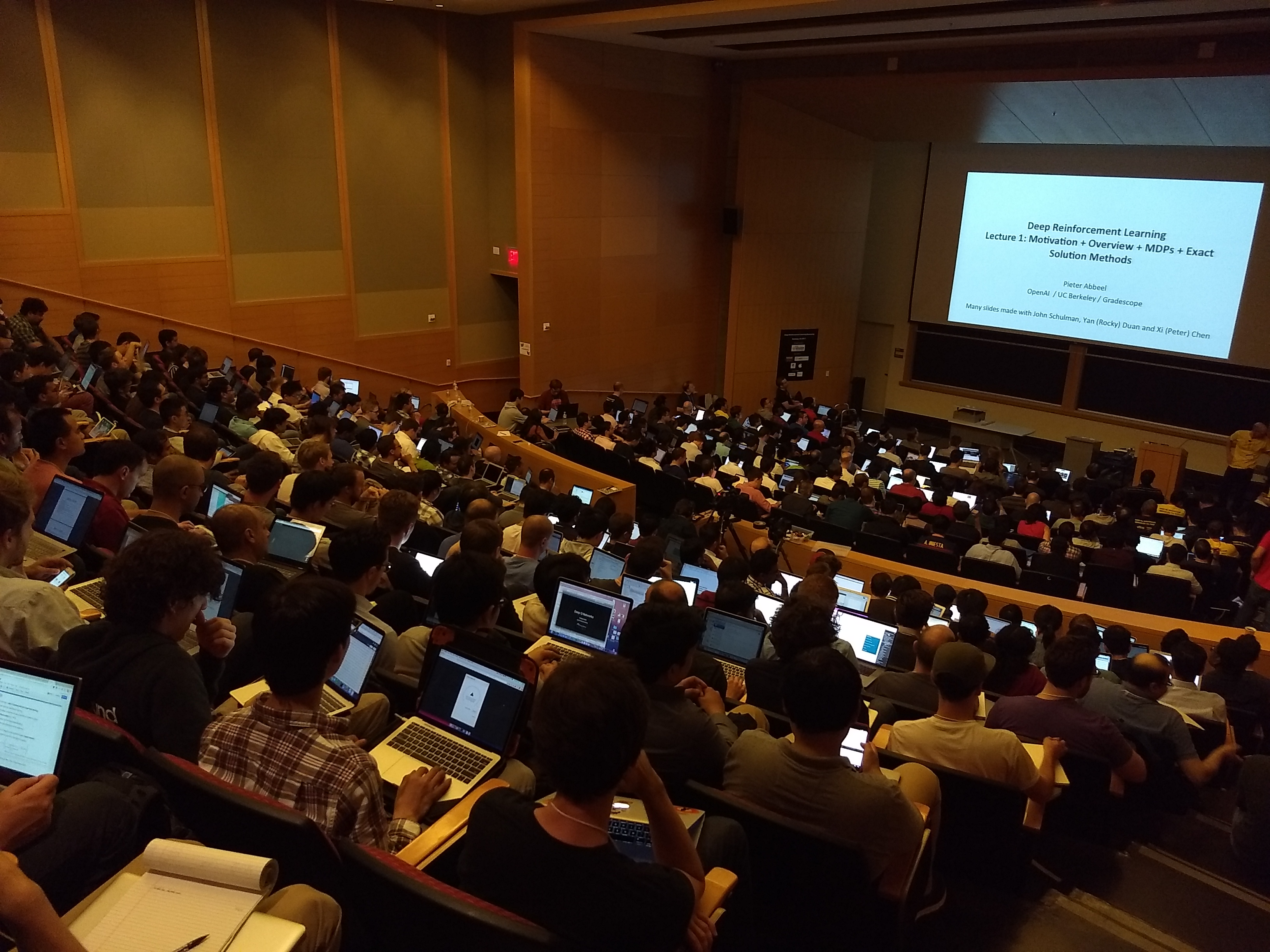

More than 150 participants were selected from all over the world, coming not only from universities but also from many industries. There were even some high-school students! I think the photo below conveys the heat and energy of the packed room (I was sitting on the stairs).

- RL Basics

- Policy Gradients

- Actor-Critic Algorithms

- Q-learning

- Evolution Strategies

Following a well-organized introduction to reinforcement learning by Pieter Abbeel, the lectures went step by step into the details of deep RL. Although cutting-edge RL research includes many approaches, the instructors gave unbiased overviews of all of them, explaining not only their advantages but also their drawbacks. Policy gradient methods were introduced first because of their recent popularity, but Mnih also presented the basics of deep Q-learning, and even the recent progress in evolution strategies was covered.

After the lectures, participants were free to walk around the building and work on the self-learning labs on their laptops using IPython notebooks. This made the event more engaging and fruitful than watching video lectures, because participants could implement deep RL algorithms and play with RL problems themselves, with support from teaching assistants. I was particularly happy to see that Chainer was selected as the deep learning framework for the lab. (Please note that this was NOT related to our sponsorship, as instructor Peter Chen kindly clarified.)

- RL trouble-shooting and debugging strategies

The highlight of the event, however, might have been the lessons on deep RL tips and research frontiers. John Schulman gave a down-to-earth lecture titled “The Nuts and Bolts of Deep RL Research”, with many hints on RL approaches that are mentioned only in passing in research papers. Though I’m not sure whether the slides will be released to the public, a participant summarized the talk and uploaded it to GitHub (williamFalcon/DeepRLHacks).

- Current research frontiers

For experts, the research frontier talks by Mnih and Levine at the end of each day were also informative.

As a representative of one of the event sponsors, I had the opportunity to give a short introduction to Preferred Networks, and afterwards many participants approached me during the breaks. Students were interested in our internship program, people from industry asked what PFN is doing in robotics, and practitioners wanted to understand the advantages of Chainer for deep RL. I was also able to talk with the instructors and teaching assistants, and I really enjoyed letting them know what PFN is up to.

I hope these connections will lead to meaningful cooperation in the future.
