
We released chainer-GAN-lib, a collection of Chainer implementations of recent GAN variants. This library is aimed at those who think “GAN research moves too fast to follow”, “the experiments in GAN papers cannot be reproduced at all”, or “how can I implement the gradient penalty with Chainer?”

https://github.com/pfnet-research/chainer-gan-lib

Characteristics of chainer-GAN-lib

- Good starting point for R&D

You can modify these implementations, or use them as baselines for performance comparison, when developing something with GANs or studying new GAN methods.

- Evaluated quantitatively

We have confirmed, using the inception score, that these implementations achieve performance close to the values reported in the papers. However, since the core part of each algorithm is not completely reproduced, the scores may differ somewhat from the reported ones.

- Implemented gradient penalty based methods

We have implemented recently proposed techniques that use a gradient penalty, such as WGAN-GP, DRAGAN, and Cramer GAN. Because the current Chainer does not perform backward in a differentiable manner (support is scheduled for Chainer v3, see https://github.com/chainer/chainer/pull/2970), the penalty is implemented by writing backward as forward instead.
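To illustrate what "writing backward as forward" means, here is a minimal NumPy sketch, not the library's actual code. It assumes a hypothetical one-hidden-layer critic D(x) = w2 · leaky_relu(W1 x + b1); the names `critic_forward`, `critic_input_grad`, `gradient_penalty`, `W1`, `b1`, and `w2` are made up for this example. The gradient of the critic with respect to its input is computed by hand using ordinary forward operations, then plugged into the WGAN-GP style penalty λ · E[(‖∇D(x̂)‖₂ − 1)²] with x̂ sampled on the line between real and generated samples.

```python
import numpy as np


def leaky_relu(z, slope=0.2):
    return np.where(z > 0, z, slope * z)


def critic_forward(x, W1, b1, w2, slope=0.2):
    """Hypothetical critic: one score per row of x, shape (batch,)."""
    h = leaky_relu(x @ W1.T + b1, slope)       # (batch, hidden)
    return h @ w2


def critic_input_grad(x, W1, b1, w2, slope=0.2):
    """Gradient of D(x) w.r.t. x, written by hand as forward operations.

    In a framework, building this from differentiable ops is what makes
    the penalty itself differentiable w.r.t. the critic parameters.
    """
    z = x @ W1.T + b1                          # (batch, hidden)
    dh_dz = np.where(z > 0, 1.0, slope)        # derivative of leaky_relu
    return (dh_dz * w2) @ W1                   # (batch, in_dim)


def gradient_penalty(x_real, x_fake, W1, b1, w2, lam=10.0, rng=None):
    """WGAN-GP style penalty on points interpolated between real and fake."""
    rng = np.random.default_rng() if rng is None else rng
    eps = rng.uniform(size=(x_real.shape[0], 1))
    x_hat = eps * x_real + (1.0 - eps) * x_fake
    grad = critic_input_grad(x_hat, W1, b1, w2)
    norm = np.sqrt(np.sum(grad ** 2, axis=1))  # per-sample L2 norm
    return lam * np.mean((norm - 1.0) ** 2)
```

With a real network the hand-written gradient has to be derived layer by layer in the same way, which is why built-in support for differentiable backward makes this considerably simpler.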

Currently implemented algorithms are as follows:

- DCGAN (https://arxiv.org/abs/1511.06434)

- Minibatch discrimination (https://arxiv.org/abs/1606.03498)

- Denoising feature matching (https://openreview.net/pdf?id=S1X7nhsxl)

- BEGAN (https://arxiv.org/abs/1703.10717)

- WGAN-GP (https://arxiv.org/abs/1704.00028)

- DRAGAN (https://arxiv.org/abs/1705.07215)

- Cramer GAN (https://arxiv.org/abs/1705.10743)

Future plan

In the future, we plan to extend this library in the following areas. Contributions via PRs are greatly appreciated.

- Evaluation metric

In addition to the inception score and FID, we will add other performance evaluations such as analogy generation and the birthday paradox test.

- Dataset

Toy data such as a two-dimensional Gaussian mixture, and difficult data such as ImageNet

- Network

Currently we are testing ResNet

- Algorithm

Add new promising methods as needed
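For readers unfamiliar with the inception score used in the quantitative evaluation, the following NumPy sketch shows the basic formula, exp(E_x[KL(p(y|x) ‖ p(y))]). It is an illustration only, not the evaluation code of this library: the function name `inception_score` is made up here, and it assumes you already have an array of class probabilities produced by a pretrained Inception network for each generated image. The commonly used protocol of splitting the samples into groups and averaging the per-group scores is omitted.

```python
import numpy as np


def inception_score(probs, eps=1e-12):
    """Inception score from class probabilities.

    probs: array of shape (n_images, n_classes); each row is p(y|x)
           for one generated image (assumed to come from a pretrained
           classifier, which is not part of this sketch).
    """
    probs = np.asarray(probs, dtype=np.float64)
    marginal = probs.mean(axis=0, keepdims=True)   # p(y) over all images
    # KL(p(y|x) || p(y)) for every image; eps avoids log(0)
    kl = np.sum(probs * (np.log(probs + eps) - np.log(marginal + eps)), axis=1)
    return float(np.exp(kl.mean()))
```

The score is lowest (1) when every image gets the same class distribution, and it grows when individual predictions are confident while the predicted classes are spread evenly over the whole set.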

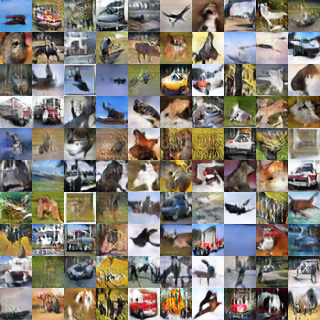

Generated examples (more results on GitHub)

DCGAN

DFM

WGAN-GP
