2017.09.11

Learning Discrete Representations via Information Maximizing Self-Augmented Training

Shohei Hido

VP of Research and Development

This is a guest post, in interview style, with Weihua Hu from the University of Tokyo, who interned at Preferred Networks last year. His research was extended after the internship and accepted at ICML 2017.

“Learning Discrete Representations via Information Maximizing Self-Augmented Training,” Weihua Hu, Takeru Miyato, Seiya Tokui, Eiichi Matsumoto, and Masashi Sugiyama; Proceedings of the 34th International Conference on Machine Learning, PMLR 70:1558-1567, 2017. (Link)

– Please briefly introduce your ICML paper and its value.

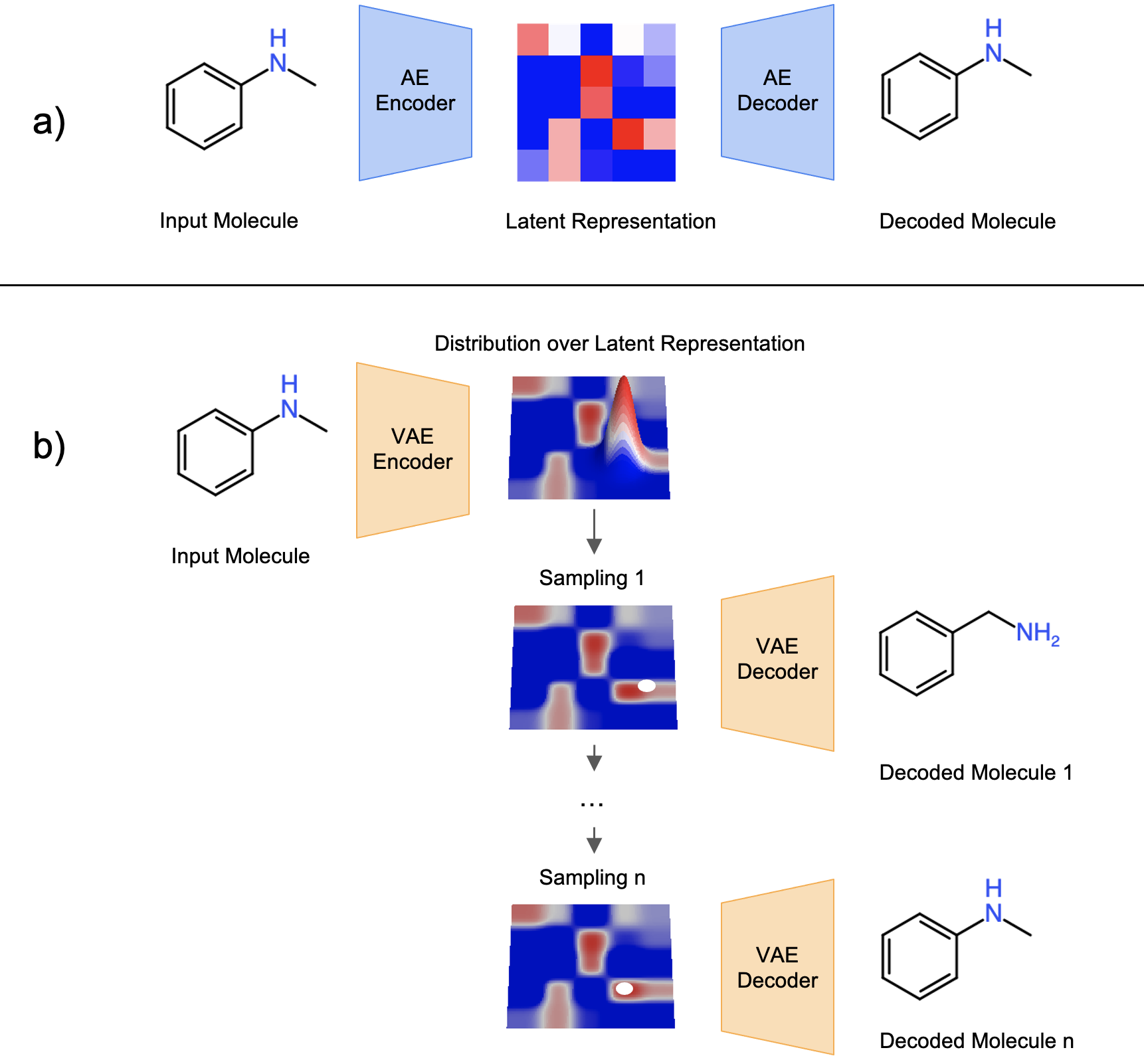

Sure. We propose a deep learning method for unsupervised discrete representation learning. The idea is to learn discrete representations that retain as much information about the input data as possible while also being invariant to data augmentation, that is, to transformations that do not change the meaning of the data (e.g., affine transformations and small perturbations of image data). To our surprise, this simple learning method achieved state-of-the-art performance on both clustering and hash learning on many benchmark datasets. Check out my GitHub repository for the implementation!
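To make the two ingredients described above concrete, here is a minimal sketch in plain Python. It is not the implementation from the repository; it only assumes that a model has already produced, for each input, a probability distribution over discrete cluster labels, once for the original input and once for an augmented copy. The function names, the use of KL divergence as the invariance measure, and the trade-off weight `lam` are assumptions made for illustration.

```python
import math

EPS = 1e-12  # small constant to avoid log(0); an illustrative choice


def entropy(p):
    """Shannon entropy (in nats) of one discrete distribution."""
    return -sum(q * math.log(q + EPS) for q in p)


def mutual_information(probs):
    """Estimate I(X; Y) = H(Y) - H(Y|X) from per-sample label probabilities.

    H(Y) is the entropy of the averaged prediction (high when clusters are
    used evenly); H(Y|X) is the average per-sample entropy (low when each
    sample is assigned confidently).
    """
    n, k = len(probs), len(probs[0])
    marginal = [sum(row[j] for row in probs) / n for j in range(k)]
    conditional = sum(entropy(row) for row in probs) / n
    return entropy(marginal) - conditional


def kl_divergence(p, q):
    """KL(p || q) between two discrete distributions."""
    return sum(pi * math.log((pi + EPS) / (qi + EPS)) for pi, qi in zip(p, q))


def invariance_penalty(probs, probs_aug):
    """Average mismatch between predictions on original and augmented inputs."""
    return sum(kl_divergence(p, q) for p, q in zip(probs, probs_aug)) / len(probs)


def objective(probs, probs_aug, lam=0.1):
    """Quantity to minimize: stay invariant while keeping information.

    `lam` is a hypothetical trade-off weight, not a value from the paper.
    """
    return invariance_penalty(probs, probs_aug) - lam * mutual_information(probs)
```

With two samples assigned confidently to two different clusters, `mutual_information` is close to log 2, its maximum for two clusters. It drops to about zero when every sample gets the same prediction, whether uniform or collapsed onto one cluster, which is why maximizing it discourages degenerate solutions.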

– How did your presentation and poster at ICML go?

The oral presentation and poster went well. Lots of people came to talk to us, and we exchanged ideas. I also talked to a lot of people during the coffee breaks, and some of them showed interest in our work.

– How was the conference itself? Could you tell us about a few interesting papers that you found?

The conference was really huge, with around 2,500 participants. At first, I was a bit overwhelmed by the size, but later I enjoyed communicating with researchers from all over the world. I hope to meet them again at the next conference :). The most interesting paper for me was “Understanding Black-box Predictions via Influence Functions” by Pang Wei Koh and Percy Liang from Stanford (Link). This paper won the best paper award and presented a new paradigm for interpreting black-box functions (I recommend reading the paper yourself!). I think this paper will have a huge impact on research in human interpretability and reliable machine learning at large.

– Did you find or experience anything surprising at ICML?

I was surprised to find so many topics in machine learning, most of which I was not familiar with. Machine learning research is quite hot nowadays, and I was impressed that different research groups tackled different machine learning problems in their own original ways. Very impressed by human creativity!

– This paper is based on your work during the PFN internship. How did you first choose this topic?

At the beginning of the internship, I was broadly interested in developing deep learning methods that can learn from limited labeled data. During the internship, I had many discussions with my mentors and finally settled on the current topic, i.e., unsupervised representation learning.

– The co-authors include your mentors and Prof. Sugiyama, your supervisor. How did you write the paper with them?

I wrote the draft, and my mentors and Prof. Sugiyama gave me valuable comments to improve it. I was really lucky to work with such great co-authors!

– Please tell us more about your internship. Where and when did you find it?

My internship at PFN was like working in a research laboratory with great mentors and friendly fellow interns. It was an ideal internship experience for me! When I applied for the internship, PFN was already a famous AI company, so it was very natural for a machine learning student like me to apply.

– Why did you choose the PFN internship, and how was the interview process?

I chose the PFN internship because PFN offers a research-oriented internship program and has excellent team members to work with. The interview process started with CV screening. Then, I completed a take-home coding assignment and had an on-site interview. I had a good experience during the whole process, and I recommend that everyone interested in AI research give it a try!

– What do you think is the unique benefit of the PFN internship?

I think the unique benefit is that interns can work with excellent members at PFN. At the office, I was able to reach out to the great team members to have discussions and learn new things.

—

The PFN summer internship 2017 has already begun at a much bigger scale, and it also includes students from outside Japan. Applications for the 2018 summer internship are now open to students outside Japan (due 9/29). We are looking forward to receiving your application.