Writers: Tommi Kerola, Shintarou Okada, Shirou Maruyama

Preferred Networks (PFN) attended the IEEE IV 2017 conference in Redondo Beach, CA, US, one of the flagship conferences on research and applications for intelligent vehicles, including techniques for autonomous driving. Autonomous driving is one of the most important fields for PFN, which is why three of our members attended the conference to learn about the latest research and to connect with people from both academia and industry. In this blog post, we briefly summarize trends from the conference, focusing mainly on perception and motion planning.

The Intelligent Vehicles Symposium (IV) is an established annual conference on intelligent vehicles; this was the 28th edition (the first was held in 1989 in Tsukuba, Japan). More than 400 researchers and practitioners participated in the conference, where over 1120 authors published their papers. The conference took place at the Crowne Plaza Redondo Beach And Marina Hotel, which hosted four days of talks and posters as well as industry exhibitions and workshops.

(Bosch and NVIDIA autonomous driving cars at the exhibition area.)

Perception

In perception research for intelligent vehicles, we found that fish-eye cameras had a larger presence than in previous years. These cameras capture a wide field of view, which makes it possible to detect objects at various positions around the vehicle from a single camera image. While monocular and stereo camera approaches to perception were popular as expected, we also found that lidar-based approaches still dominate, despite lidar having certain weaknesses compared to standard cameras, such as high cost, sparse rays at long distances, and difficulties with reflective surfaces.

(Hänisch et al. “Free-Space Detection with Fish-Eye Cameras”, IV 2017)

At PFN, we develop our own deep learning framework, Chainer, and are naturally interested in deep learning-based approaches to autonomous driving. While several papers employed deep learning-based techniques for perception, such as efficient model architectures for semantic segmentation, a substantial amount of the research presented at the conference did not yet make use of neural networks. Compared to computer vision conferences such as CVPR, where deep learning is prevalent, many methods presented at IV still relied on hand-crafted features. We suspect this reflects some of the current requirements of existing automakers, which require researchers to come up with solutions that can run on limited hardware.

(Ramos et al. “Detecting Unexpected Obstacles for Self-Driving Cars: Fusing Deep Learning and Geometric Modeling”, IV 2017)

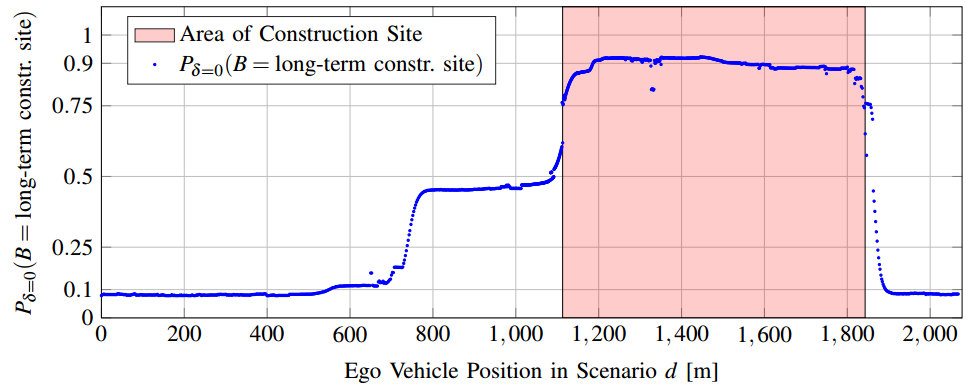

Finally, several papers focused on proposing new, vehicle-specific vision problems rather than improving upon the state of the art in established computer vision problems. These newer problems include unexpected obstacle detection, construction site recognition, and the unconstrained recognition of auxiliary traffic signs, which are signs placed underneath standard road signs that often change the intended meaning completely.

(Kunz and Schreier. “Automated Detection of Construction Sites on Motorways”, IV 2017)

(Wenzel et al. “Towards Unconstrained Content Recognition of Additional Traffic Signs”, IV 2017)

Motion Planning

In addition to the perception discussed above, motion planning is a necessary component of an autonomous car. A motion planning module decides how to control the car so that it drives safely, based on perception results such as localization, the states of other cars, and the locations of static obstacles.

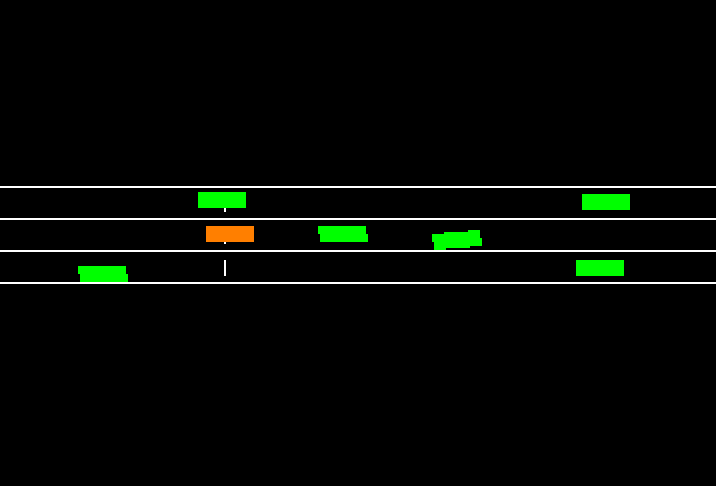

(Conceptual figure of traffic situations.)

Many presentations at the conference focused on motion planning in specific situations (lane changing, merging points, car following, intersections, parking, etc.). Most of them used model-based approaches, such as model predictive control, which repeatedly solves an optimization problem over a finite time horizon, rather than machine learning approaches.
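To make the idea of model predictive control more concrete, here is a minimal sketch that is not taken from any paper at the conference. It assumes a point-mass car-following scenario, a lead vehicle that keeps a constant speed, a small discrete set of candidate accelerations, and a brute-force search in place of a real optimizer. All names and constants are hypothetical and chosen only for illustration.

```python
from itertools import product

DT = 0.5                    # time step [s] (assumed)
HORIZON = 4                 # number of look-ahead steps (assumed)
ACTIONS = (-2.0, 0.0, 1.0)  # candidate accelerations [m/s^2] (assumed)
TARGET_GAP = 20.0           # desired distance to the lead vehicle [m] (assumed)
EFFORT_WEIGHT = 0.1         # penalty weight on acceleration (assumed)


def step(pos, vel, acc, dt=DT):
    """Advance a point-mass vehicle by one time step; speed never goes negative."""
    new_vel = max(0.0, vel + acc * dt)
    return pos + new_vel * dt, new_vel


def rollout_cost(ego, lead, accs):
    """Cost of applying the acceleration sequence `accs` over the horizon."""
    cost = 0.0
    for acc in accs:
        ego = step(ego[0], ego[1], acc)
        lead = step(lead[0], lead[1], 0.0)  # lead assumed to keep its speed
        gap = lead[0] - ego[0]
        if gap <= 0.0:
            return float("inf")  # predicted collision
        cost += (gap - TARGET_GAP) ** 2 + EFFORT_WEIGHT * acc ** 2
    return cost


def mpc_action(ego, lead):
    """Search all sequences over the horizon and return only the first action."""
    best = min(product(ACTIONS, repeat=HORIZON),
               key=lambda accs: rollout_cost(ego, lead, accs))
    return best[0]


def simulate(ego, lead, n_steps):
    """Receding-horizon loop: re-plan at every step, apply one action."""
    gaps = []
    for _ in range(n_steps):
        acc = mpc_action(ego, lead)
        ego = step(ego[0], ego[1], acc)
        lead = step(lead[0], lead[1], 0.0)
        gaps.append(lead[0] - ego[0])
    return gaps
```

Each state is a `(position, speed)` tuple, so `mpc_action((0.0, 10.0), (5.0, 10.0))` asks what the ego vehicle should do when it is only 5 m behind the lead vehicle. Practical controllers would replace the exhaustive search with a continuous optimizer and a richer vehicle model; the sketch only illustrates the plan, apply the first action, and re-plan structure.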

Deep learning methods seem to be used mainly for predicting drivers’ behaviors or for developing end-to-end control models. Behavior prediction estimates the future states of vehicles in order to avoid risks such as collisions. End-to-end control means learning a model that outputs the control values for the next time step (acceleration, steering, etc.) directly from raw camera images. We could not find end-to-end control approaches that handle complicated traffic situations, which gave us the impression that such approaches still have much room for improvement compared to model-based approaches.

Many researchers evaluate their methods in a simulator rather than on an actual vehicle. This makes sense because experiments with actual machines, including cars, tend to be riskier and more expensive. When developing a simulator for motion planning, the most difficult problem is how to mimic the naturalistic behavior of other vehicles, which is a chicken-and-egg problem in the development of autonomous cars. In fact, there is no de facto standard simulator in the motion planning community yet, so almost all researchers use self-made simulators. In such simulators, other vehicles’ behaviors tend not to be simulated well; for example, they keep moving while ignoring the behavior of surrounding vehicles. How to evaluate methods in a fair and objective manner therefore seems to be a common problem.

At the conference, there were sessions and a workshop about naturalistic driving data (NDD). NDD is a kind of dataset containing various data about driving: observed sensor data and control values of autonomous cars, object detection results, motion paths, human drivers’ behaviors, etc. Some researchers presented ways to obtain natural driving behavior from NDD and to extract meaningful information from huge sets of unlabeled NDD. For this task, NGSIM is a popular NDD dataset that was published by the U.S. Department of Transportation in 2006. This dataset contains videos of roads recorded by a fixed camera, with vehicle tracking information sampled every 100 milliseconds.

(Bird’s eye view image from the NGSIM dataset.)
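As a small illustration of what a 100-millisecond sampling interval allows, the following sketch estimates vehicle speeds and the gap between two vehicles from sampled positions. It does not use the actual NGSIM file format; the function names and the plain lists of longitudinal positions in meters are assumptions made for this example.

```python
SAMPLE_RATE_HZ = 10  # one sample every 100 ms


def speeds_from_positions(positions_m, rate_hz=SAMPLE_RATE_HZ):
    """Forward-difference speed estimates [m/s] between consecutive samples."""
    return [(b - a) * rate_hz for a, b in zip(positions_m, positions_m[1:])]


def gaps_between(follower_m, leader_m):
    """Distance [m] from follower to leader at each common sample."""
    return [lead - follow for follow, lead in zip(follower_m, leader_m)]


# Hypothetical tracks: longitudinal positions sampled every 100 ms.
leader = [30.0, 31.0, 32.0, 33.0]
follower = [0.0, 1.5, 3.0, 4.5]

leader_speeds = speeds_from_positions(leader)      # about 10 m/s
follower_speeds = speeds_from_positions(follower)  # about 15 m/s
gaps = gaps_between(follower, leader)              # shrinking gap
```

Real tracking data is noisy, so in practice the raw differences would usually be smoothed before being used to model driver behavior.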

While NGSIM is commonly used, there are still few NDD datasets that can be used as freely. We suppose this is because researchers cannot publish such data without authorization due to privacy concerns, or because the required equipment is expensive. To tackle the scarcity of publicly available datasets, some projects presented at this conference focus on aggregating and organizing public datasets containing traffic scenarios, road networks, vehicle dynamics, open source maps, etc. Open innovation might be able to solve this problem; one example is Project Apollo, which Baidu recently announced together with various stakeholders in autonomous driving.