What is a 3D camera?

In recent years, 3D cameras have been increasingly used in computer vision applications. Fields such as robotics, autonomous driving, and quality inspection now benefit from the availability of different types of 3D sensing technologies. Leveraging the extra data provided by such sensors allows for better performance on tasks such as detection and recognition, pose estimation, and 3D reconstruction. This blog post presents an overview of the most common 3D sensing technologies available on the market and their underlying mechanisms for generating 3D data.

Digital (color) cameras capture light that enters the optical system through an opening known as the aperture. Internally, a grid (or array) of photosensors produces an electrical current at each sensor element when it is struck by incoming light. The different current levels are combined into a composite pattern of data that represents the light, ultimately encoded in three channels known as RGB (red, green, blue).

A 3D camera provides 3D data about the objects within its field of view. Some cameras encode, for each pixel, the distance (depth) to the object, while others output 3D coordinates. In some cases, color information can be appended to obtain what is commonly known as RGBD or RGBXYZ data. However, this often requires two cameras, one that outputs color and one that outputs 3D data, which are synchronized and calibrated (i.e., the roto-translation between them is known, as well as the lens distortion parameters).
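The relationship between a per-pixel depth map and 3D coordinates can be sketched in a few lines. This is a minimal illustration, assuming a pinhole model, depth measured along the optical axis, and a color image that is already registered pixel-for-pixel to the depth map. The function names and the intrinsic parameters (`fx`, `fy`, `cx`, `cy`) are illustrative and not taken from any particular camera SDK.

```python
import numpy as np

def depth_to_points(depth, fx, fy, cx, cy):
    """Back-project an H x W depth map into an H x W x 3 array of XYZ points.

    Assumes a pinhole camera with focal lengths fx, fy and principal point
    (cx, cy), all in pixels. Output units match the units of `depth`.
    """
    h, w = depth.shape
    u, v = np.meshgrid(np.arange(w), np.arange(h))  # pixel column and row indices
    x = (u - cx) * depth / fx
    y = (v - cy) * depth / fy
    return np.stack([x, y, depth], axis=-1)

def attach_color(points, rgb):
    """Concatenate registered per-pixel color with XYZ into an H x W x 6 RGBXYZ array."""
    return np.concatenate([rgb.astype(np.float64), points], axis=-1)
```

In practice the color and depth images come from different sensors, so the registration assumed here is exactly what the calibration between the two cameras has to provide.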

What are the most common technologies?

Most cameras currently available on the market are centered around four major 3D sensing technologies:

Stereo vision

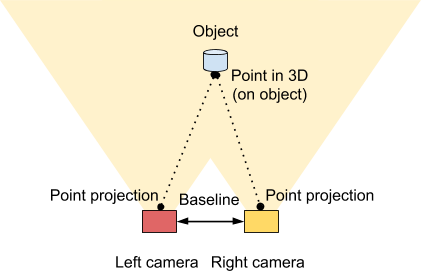

Stereo vision works on the same principle as human eyes. Two cameras are placed next to each other at a fixed distance (known as the baseline) and triggered at the same time. This results in two images (left and right) in which the same object is seen from slightly different viewpoints. Point-to-point correspondences are computed between the two images. Given the roto-translation between the cameras and the lens distortion parameters, the correspondences can be triangulated to obtain the respective locations in 3D space.

Basic principles of stereo vision
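For a rectified stereo pair, triangulation reduces to a simple relation between depth and disparity (the horizontal shift of a point between the left and right image): Z = f · B / d. The following is a minimal sketch, assuming rectified and undistorted images, a pinhole model, and identical intrinsics for both cameras. The parameter names (`focal_px`, `baseline_m`, `cx`, `cy`) are illustrative.

```python
def depth_from_disparity(disparity_px, focal_px, baseline_m):
    """Depth along the optical axis for a rectified stereo pair: Z = f * B / d."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive (zero corresponds to a point at infinity)")
    return focal_px * baseline_m / disparity_px

def triangulate(u_left, v, u_right, focal_px, baseline_m, cx, cy):
    """3D point (in the left camera frame) from one left/right correspondence.

    In rectified images a correspondence lies on the same row v, so only the
    column coordinates u_left and u_right differ.
    """
    z = depth_from_disparity(u_left - u_right, focal_px, baseline_m)
    x = (u_left - cx) * z / focal_px
    y = (v - cy) * z / focal_px
    return x, y, z
```

For example, with a focal length of 800 px and a 12 cm baseline, a disparity of 20 px corresponds to a depth of 800 × 0.12 / 20 = 4.8 m.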

This technology has the advantage of a very low implementation cost, as most off-the-shelf cameras can be used. It is also flexible with regard to the choice of point correspondence matching algorithm, which, in turn, determines how accurate the resulting data is.

However, computing fast and accurate point-to-point correspondences is a challenging problem, and the depth estimation error increases quadratically with the distance to the camera (assuming a pinhole camera model). Moreover, recovering point-to-point correspondences requires the object to be textured, which is not always a safe assumption in real-world scenes.
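The quadratic growth follows from the rectified-stereo relation Z = f · B / d, where f is the focal length in pixels, B the baseline, and d the disparity. Differentiating with respect to d gives |dZ/dd| = f · B / d² = Z² / (f · B), so a fixed matching error in pixels turns into a depth error that scales with Z². A rough first-order sketch, in which the default disparity error of 0.25 px is only an illustrative assumption:

```python
def depth_error(z_m, focal_px, baseline_m, disparity_error_px=0.25):
    """First-order depth uncertainty: dZ ~= Z^2 / (f * B) * dd."""
    return (z_m ** 2) / (focal_px * baseline_m) * disparity_error_px
```

With f = 800 px and B = 0.1 m, the estimated error is 1.25 cm at 2 m and 5 cm at 4 m: doubling the distance quadruples the error.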

Some popular cameras in this category are the Bumblebee line from FLIR Systems, ZED from StereoLabs, and Karmin from Nerian Vision Technologies.

Time-of-Flight (ToF)

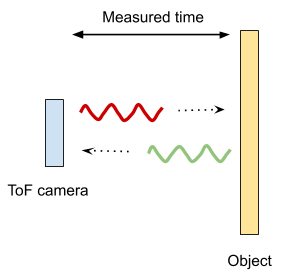

Time-of-flight uses an artificial light signal (emitted by a laser or an LED) that travels between the camera and the object. The camera measures the time it takes for the light to reach the object and bounce back, and estimates the distance from that round-trip time.

Basic principles of time-of-flight (ToF)
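Since the light covers the camera-to-object distance twice, the distance is half the round-trip time multiplied by the speed of light. A minimal sketch of this relation, assuming a direct (pulsed) measurement of the round-trip time and ignoring effects such as multi-path reflections; the function names are illustrative:

```python
SPEED_OF_LIGHT = 299_792_458.0  # metres per second, in vacuum

def tof_distance(round_trip_s):
    """Distance to the object from the measured round-trip time of the light signal."""
    return SPEED_OF_LIGHT * round_trip_s / 2.0

def round_trip_time(distance_m):
    """Inverse relation: how long the light needs to reach the object and return."""
    return 2.0 * distance_m / SPEED_OF_LIGHT
```

A round trip of 10 ns corresponds to roughly 1.5 m. Resolving 1 cm requires timing differences of about 67 picoseconds, which gives a sense of how demanding the measurement is.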

In general, ToF cameras provide denser 3D data than stereo vision while operating at higher frame rates. They are also easier to configure than stereo cameras, since no point correspondence matching is needed.

However, limitations of this technology include relatively low resolution and sensitivity to the reflectance properties of the object’s material. As a result, suboptimal results are often obtained with objects that are either transparent or highly reflective.

Some popular cameras in this category are DepthSense from SoftKinetic, Kinect from Microsoft, Senz3D from Creative Labs, and Helios from LUCID Vision Labs.

Structured light

Structured light cameras project a known pattern onto the objects within the field of view, and 3D data is obtained by analyzing how the pattern deforms on their surfaces.
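The kind of pattern varies between cameras and is not specified here. As one illustration, a widely used scheme projects a sequence of binary Gray-code stripe images, so that the on/off sequence observed at a camera pixel identifies the projector column that illuminated it. The sketch below assumes such a Gray-code sequence and a simple fixed brightness threshold; both are assumptions for illustration, and the function names are hypothetical.

```python
import numpy as np

def make_patterns(width, n_bits):
    """Gray-code stripe patterns as an (n_bits, width) array of 0/1, most significant bit first."""
    cols = np.arange(width)
    gray = cols ^ (cols >> 1)                  # binary-reflected Gray code of each column index
    shifts = np.arange(n_bits - 1, -1, -1)
    return ((gray[None, :] >> shifts[:, None]) & 1).astype(np.uint8)

def decode_columns(frames, threshold=128):
    """Recover the projector column seen by each camera pixel.

    frames: (n_bits, H, W) stack of camera images, one per projected pattern,
    in the same order as make_patterns. Returns an (H, W) integer array.
    """
    bits = (np.asarray(frames) > threshold).astype(np.int64)
    binary = np.zeros(bits.shape[1:], dtype=np.int64)
    value = np.zeros_like(binary)
    for k in range(bits.shape[0]):
        binary = binary ^ bits[k]              # binary bit k is the XOR of Gray bits 0..k
        value = (value << 1) | binary
    return value
```

Once each camera pixel is associated with a projector column, the projector can be treated like a second camera and the correspondence triangulated in the same way as in stereo vision.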

Such cameras usually provide better quality than stereo vision cameras at close distances, since the projected pattern supplies the clear, predefined texture needed for point-to-point correspondence matching.

However, since correspondence matching is still an expensive operation, they often operate at lower frame rates than other cameras (such as ToF) and require tuning of the matching parameters.

Some popular cameras from this category are the RealSense line from Intel and Ensenso from IDS Imaging.

Laser triangulation

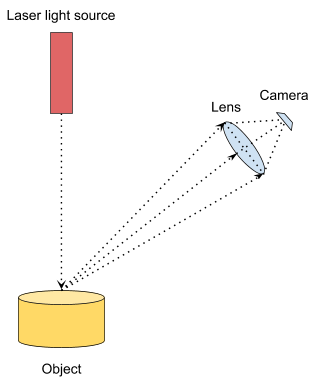

Laser triangulation uses a 2D camera and a laser light source. The laser projects a line onto the object in the field of view, and the line appears deformed according to the shape of the object’s surface. Using the 2D camera, the position of the line is determined in multiple images, which allows the distance between the object and the laser light source to be calculated.

Basic principles of laser triangulation
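The computation can be sketched for one simplified geometry. The assumptions here are illustrative and not taken from a specific sensor: the camera looks straight down at a flat reference plane, the laser sheet is tilted by `laser_angle_deg` relative to the camera's optical axis, image scale is constant (`mm_per_px`), and the line moves toward lower row indices as the surface rises. Under these assumptions a surface of height h shifts the line sideways by h · tan(angle).

```python
import numpy as np

def line_rows(image):
    """Sub-pixel row of the laser line in every image column (intensity-weighted centroid).

    Columns that contain no laser light yield NaN.
    """
    image = np.asarray(image, dtype=np.float64)
    rows = np.arange(image.shape[0])[:, None]
    with np.errstate(invalid="ignore", divide="ignore"):
        return (rows * image).sum(axis=0) / image.sum(axis=0)

def height_profile(image, reference_row, mm_per_px, laser_angle_deg):
    """Height above the reference plane (mm) for each column of one laser-line image."""
    shift_mm = (reference_row - line_rows(image)) * mm_per_px
    return shift_mm / np.tan(np.radians(laser_angle_deg))
```

Each image yields a single height profile along the laser line; stacking the profiles recorded while the object moves under the line produces the full 3D surface.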

Laser triangulation has multiple advantages over other 3D sensing technologies. It provides high-quality data with little computational burden. Also, lasers are relatively cheap, have a very long lifetime, and can handle a wide range of material properties.

However, due to the need for scanning the object, the process can be slow, making it impractical for real-time applications.

Which camera should I use for my project(s)?

There is no single 3D sensing technology that is suitable for all projects. Each is more or less robust to different object surface properties or changes in lighting conditions, provides denser or sparser 3D data, and operates at a different speed, making it more or less attractive for the task at hand.

To decide on the proper technology, one first needs to answer the following questions:

- What is the maximum allowed measurement error for the given project?

- Which types of objects does the project handle? What are their surface properties?

- What is the working distance range?

- What is the budget for the 3D sensor?

- Is real-time processing of the data required?

Stereo vision and structured light sensors are typically good choices for use cases that involve measuring 3D coordinates or lengths, i.e., where high accuracy is important and the objects are within a relatively small working distance range. The downside is that high-resolution data comes with a high computational cost (and, therefore, low frame rates). Also, the parameters of the matching algorithms can be difficult to configure and need to be adjusted to the environment (lighting conditions, material properties, etc.).

Time-of-flight cameras are typically used in settings such as autonomous robots (e.g., indoor navigation) or palletizing tasks, where precision can be traded for speed. They are usually easy to configure and cheaper than stereo/structured light cameras, but also more sensitive to the reflectance properties of the objects.

Laser triangulation sensors are typically good choices for applications such as quality control or measurement of coordinates/lengths, which require high-precision data even for low-contrast objects. This comes at the cost of speed, since the laser line has to be recorded at each step of the scan.

