
This post is contributed by Naoki Matsumoto, who was an intern at PFN and is currently working as a part-time engineer.

Introduction

Hello, my name is Naoki Matsumoto. I am a first-year Ph.D. student at the Graduate School of Informatics at Kyoto University. During my internship at Preferred Networks, Inc. (PFN), I worked on improving the usability of object storage and distributed cache systems operated by PFN under the theme of “Accelerating Machine Learning/Deep Learning Workloads Using Cache”. In this article, I introduce meta-fuse-csi-plugin, a CSI driver that can be used with any FUSE implementation.

Storage Environment at PFN

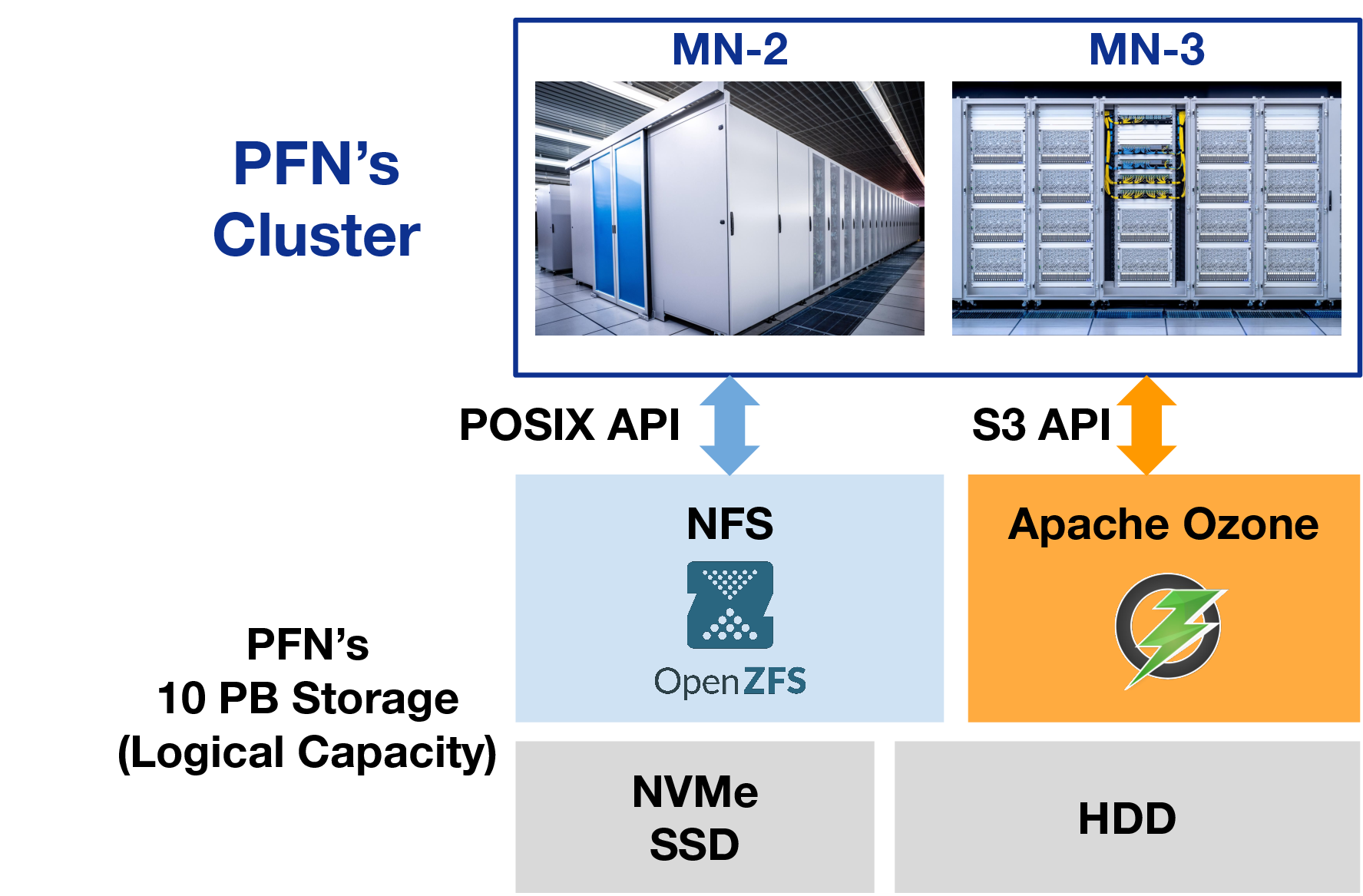

PFN internally runs NFS servers [1], object storage built on Apache Ozone [2][3], and a distributed cache system [4].

Fig.1 Storage systems at PFN

Apache Ozone [5] provides an S3-compatible API. The S3 API, including operations such as GetObject and ListObjects, has become the de facto standard API for object storage. Users can access S3-compatible object storage systems with S3 clients such as aws-cli [6] and aws-sdk [7].

In general, S3-compatible object storage systems like Apache Ozone don’t provide a POSIX API, because they are designed for high performance and scalability rather than for file system semantics. As a result, familiar tools such as “ls” for listing objects or “less” for viewing the content of an object are not available. This may not be a problem in environments where all storage is accessed through the S3 API. However, in environments like PFN, which provide both NFS with a POSIX API and object storage with an S3 API, users and software must handle both APIs, which increases development and operation costs.
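
To make the gap between the two APIs concrete, here is a minimal sketch of how an S3-style listing with a prefix and a delimiter groups a flat key namespace into "directory-like" results. It is an in-memory model only: `list_objects` and the sample keys are hypothetical names for illustration, not part of any S3 client.

```python
def list_objects(keys, prefix="", delimiter="/"):
    """Group flat object keys the way an S3-style listing does.

    Returns (objects, common_prefixes): keys directly under `prefix`, and
    the "sub-directories" obtained by cutting at the next delimiter.
    """
    objects, common_prefixes = [], set()
    for key in sorted(keys):
        if not key.startswith(prefix):
            continue
        rest = key[len(prefix):]
        if delimiter and delimiter in rest:
            # Everything up to the next delimiter acts like a directory name.
            common_prefixes.add(prefix + rest.split(delimiter, 1)[0] + delimiter)
        else:
            objects.append(key)
    return objects, sorted(common_prefixes)


# Hypothetical bucket contents.
keys = [
    "datasets/train/0001.png",
    "datasets/train/0002.png",
    "datasets/README.md",
    "models/resnet.pt",
]
print(list_objects(keys, prefix="datasets/"))
```

A tool like “ls” expects real directories, whereas here directories exist only as a by-product of how keys are grouped, which is one reason a translation layer is needed.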

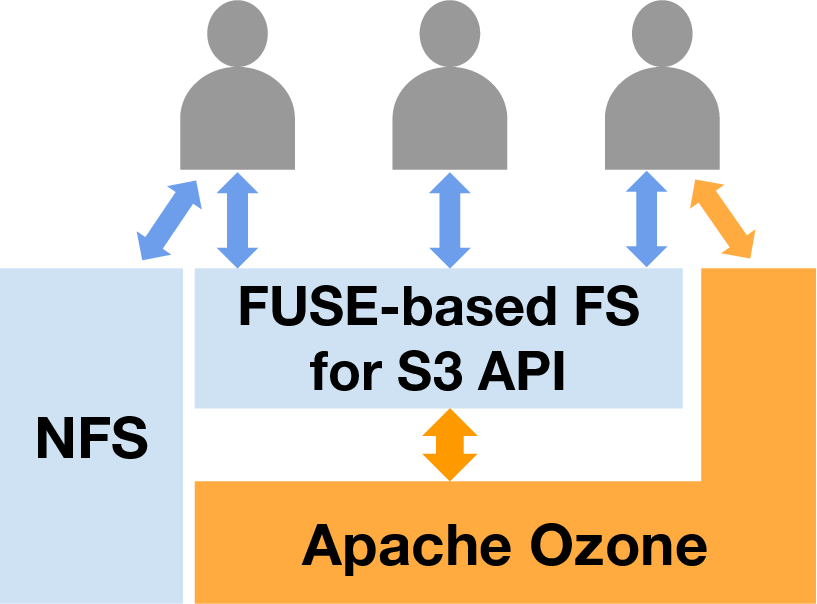

Various tools (like mountpoint-s3 [8]) have been developed to use object storage as if it were a local file system. With these tools, a POSIX API becomes the interface to object storage. As shown in the figure below, users can access object storage with their usual tools and software, which improves the storage user experience.

Fig.2 Transparent use with FUSE-based FS for S3 API on Apache Ozone

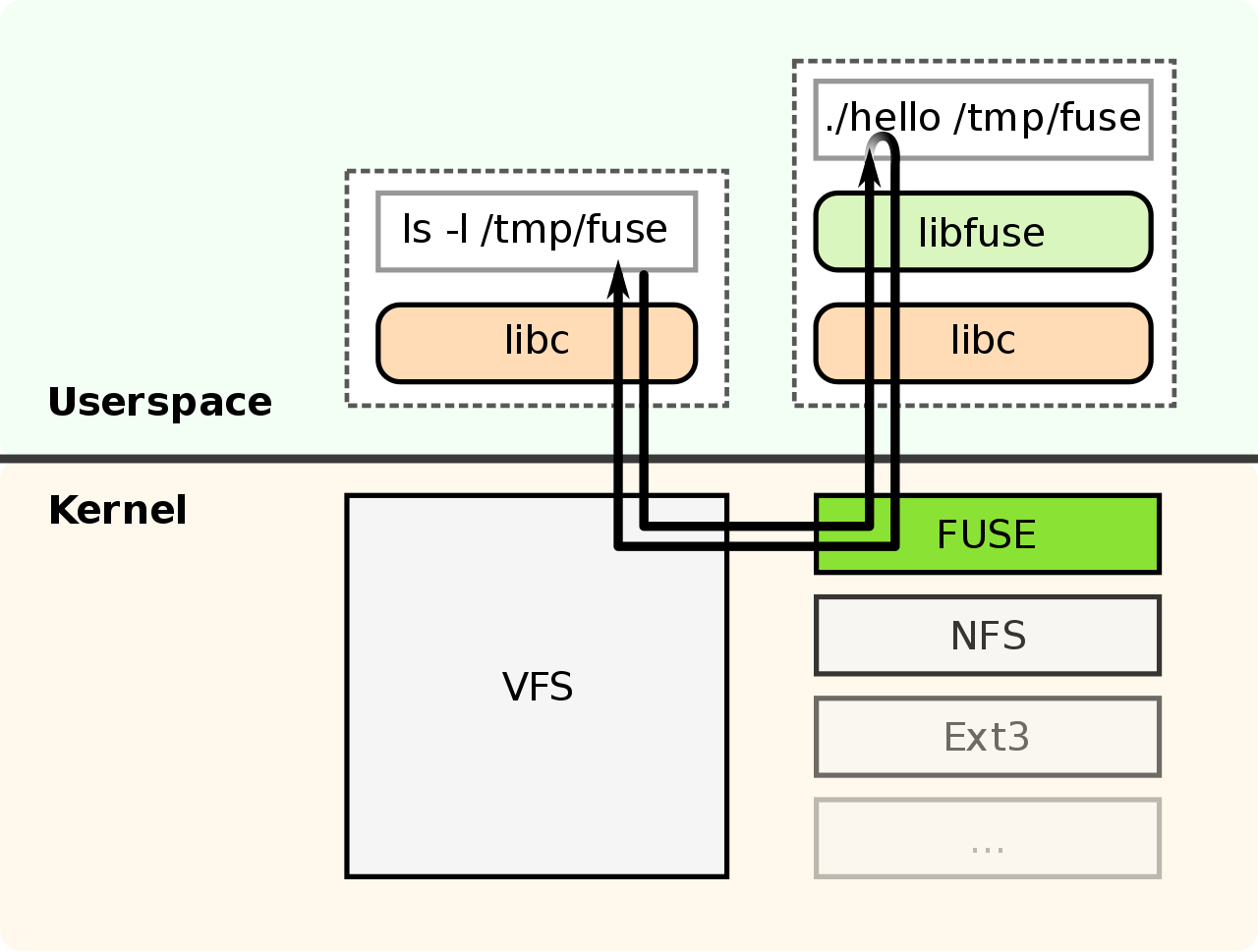

These implementations use FUSE (Filesystem in Userspace) [9], a feature of the Linux kernel that enables file systems to run in user space. Because of this, developers can write file systems in their favorite languages, such as C, Go, or Rust. Moreover, even if a file system implementation crashes, only that process dies; it does not take the kernel down with it.

Fig.3 Overview of FUSE (cited from https://commons.wikimedia.org/wiki/File:FUSE_structure.svg )

Issues on Running FUSE in Kubernetes

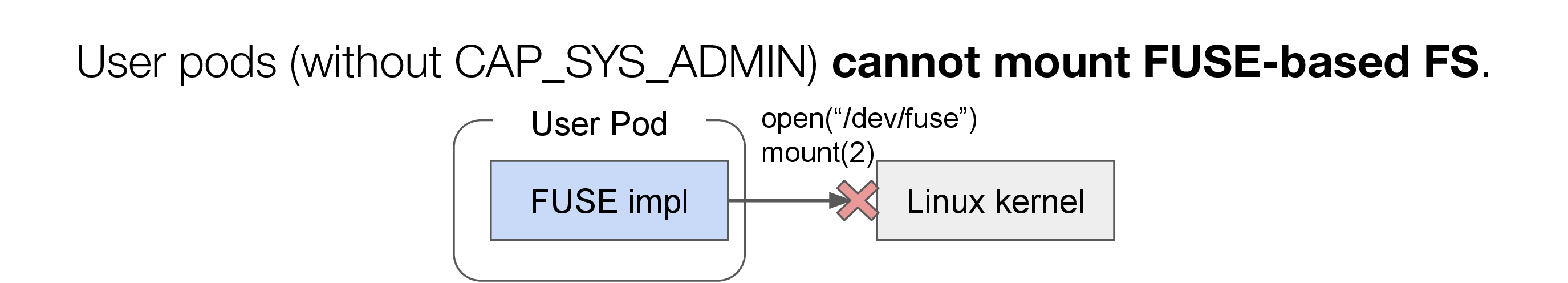

For security reasons, Pods used by normal users are not granted CAP_SYS_ADMIN at PFN. Therefore, normal users cannot use FUSE in their Pods as is.

As mentioned above, FUSE is a Linux kernel feature. It provides a device file, /dev/fuse, as its interface. To mount a file system implemented with FUSE, you need to open /dev/fuse through open(2) to obtain a file descriptor (fd) over which the kernel and the FUSE implementation communicate, and then specify the mount point through mount(2). To mount a FUSE file system inside a Kubernetes Pod, /dev/fuse must be exposed in the Pod, and the “CAP_SYS_ADMIN” capability must be assigned to the Pod. CAP_SYS_ADMIN allows operations that affect the entire system, such as operating on device files like /dev/fuse or calling mount(2). Therefore, while assigning CAP_SYS_ADMIN enables Pods to use FUSE, it also makes it possible to manipulate other users’ Pods or the system itself.
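
The link between the opened fd and mount(2) is the mount data string: the kernel's FUSE documentation lists `fd=`, `rootmode=`, `user_id=` and `group_id=` among the mount options. The sketch below only builds that string; it does not call mount(2), and `fuse_mount_data` is a hypothetical helper name used for illustration.

```python
import stat


def fuse_mount_data(fd, uid, gid, allow_other=False):
    """Build the comma-separated option string handed to mount(2) for FUSE.

    `fd` is the descriptor obtained by opening /dev/fuse; rootmode is the
    file type of the root inode in octal (a directory here).
    """
    options = [
        f"fd={fd}",
        f"rootmode={stat.S_IFDIR:o}",
        f"user_id={uid}",
        f"group_id={gid}",
    ]
    if allow_other:
        options.append("allow_other")
    return ",".join(options)


print(fuse_mount_data(3, 1000, 1000))
```

Because the fd is named inside the mount options, whoever performs the mount must also hold the open descriptor, which is why both steps end up in the same privileged process.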

Fig.4 Problems with mounting FUSE in Kubernetes Pods

Managed Kubernetes services in the cloud have the same issue. Google Cloud Platform provides gcsfuse [10], a FUSE implementation for Google Cloud Storage (GCS). Using gcsfuse directly on Google Kubernetes Engine (GKE) requires CAP_SYS_ADMIN. However, assigning such elevated privileges to user Pods should be avoided as much as possible for security reasons. Therefore, Google provides gcs-fuse-csi-driver [12], a dedicated Container Storage Interface (CSI) driver [11]. By using gcs-fuse-csi-driver, users can mount buckets with gcsfuse in their Pods without assigning CAP_SYS_ADMIN.

During my internship, I aimed to develop a more generic CSI driver that, like gcs-fuse-csi-driver, enables any FUSE implementation to run inside users’ Pods without CAP_SYS_ADMIN.

Inside gcs-fuse-csi-driver

First, I looked into gcs-fuse-csi-driver, which runs gcsfuse in a normal user’s Pod. A Kubernetes Pod manifest like the one below is used to mount a volume provided by gcs-fuse-csi-driver.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: gcs-fuse-csi-example-ephemeral
  namespace: NAMESPACE
  annotations:
    gke-gcsfuse/volumes: "true"
spec:
  terminationGracePeriodSeconds: 60
  containers:
  - image: busybox
    name: busybox
    command: ["sleep"]
    args: ["infinity"]
    volumeMounts:
    - name: gcs-fuse-csi-ephemeral
      mountPath: /data
      readOnly: true
  serviceAccountName: KSA_NAME
  volumes:
  - name: gcs-fuse-csi-ephemeral
    csi:
      driver: gcsfuse.csi.storage.gke.io
      readOnly: true
      volumeAttributes:
        bucketName: BUCKET_NAME
        mountOptions: "implicit-dirs"
```

Manifest to use gcs-fuse-csi-driver

(cited from https://cloud.google.com/kubernetes-engine/docs/how-to/persistent-volumes/cloud-storage-fuse-csi-driver)

With the above manifest, the file system provided by gcsfuse is mounted on /data in a busybox container.

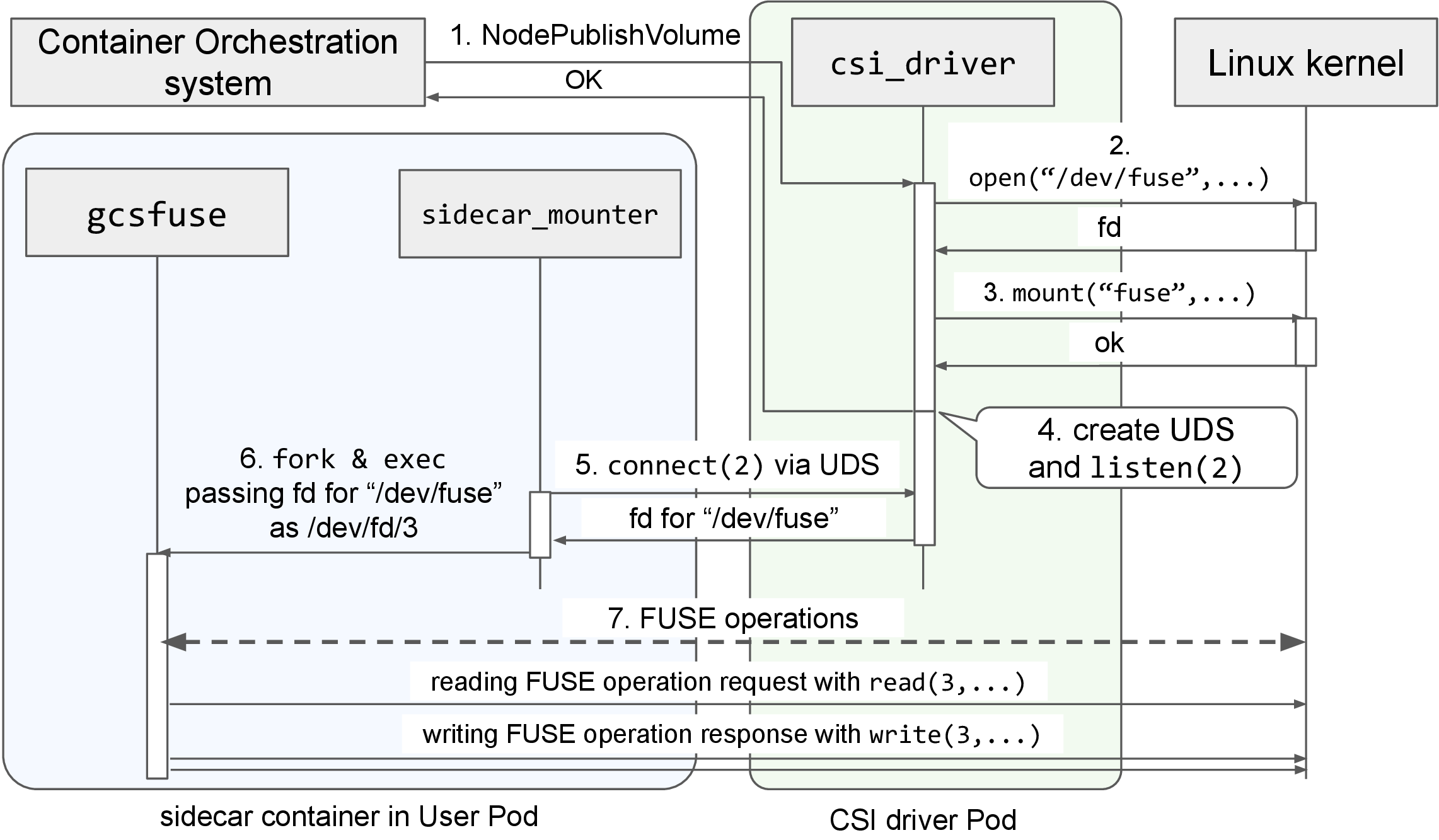

Internally, the mounting process is handled by the CSI driver. The gcs-fuse-csi-driver has two components. One is the CSI driver Pod, created and operated by the cluster administrator. This Pod is privileged: it holds CAP_SYS_ADMIN and can therefore open(2) /dev/fuse and call mount(2). These Pods are deployed on each node as a DaemonSet. The other is the sidecar_mounter, which runs in an unprivileged sidecar container inside the user’s Pod.

The sidecar container inside a normal user’s Pod runs the sidecar_mounter and gcsfuse. A MutatingWebhook injects this sidecar container into Pods that use gcs-fuse-csi-driver.

The flow up to the point where gcsfuse is mounted is as follows.

- The container orchestration system calls NodePublishVolume on the CSI driver (csi_driver).

- The CSI driver opens /dev/fuse using open(2) and obtains the file descriptor (fd) needed for FUSE operation.

- The CSI driver calls mount(2), specifying the obtained fd and the other mount options.

- The CSI driver creates a UNIX Domain Socket (UDS) in the emptyDir mounted to the sidecar container, launches a thread that listens for connections on the UDS via listen(2), and completes NodePublishVolume.

  Note: Each CSI driver Pod has /var/lib/kubelet/pods/ mounted, so it can operate on the emptyDir mounted to other Pods running on the same node via the host file system.

- The sidecar_mounter connects to the UDS in the emptyDir mounted to its container via connect(2) and receives the fd for FUSE processing, for which mount(2) has already completed.

- The sidecar_mounter specifies the received fd in exec.Cmd’s ExtraFiles (passed as /dev/fd/3) and launches gcsfuse.

  Note: The fd specified in ExtraFiles is inherited when the sidecar_mounter launches the gcsfuse process via fork(2) + execve(2).

- gcsfuse uses the fd at the specified path (/dev/fd/3) to communicate with the kernel.
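
The last two steps, handing an already-open fd to a child process and telling it which /dev/fd/N path to use, can be sketched as follows. This is an illustration in Python rather than the driver's Go code: a pipe stands in for the /dev/fuse fd, and `launch_with_fd` and `CHILD` are hypothetical names. One difference worth noting: in Go, entry i of ExtraFiles becomes fd 3+i in the child, whereas Python's `pass_fds` keeps the original fd number.

```python
import os
import subprocess
import sys


def launch_with_fd(fd, program):
    """Start `program` with `fd` inherited and /dev/fd/<fd> appended as its last argument."""
    argv = [*program, f"/dev/fd/{fd}"]
    return subprocess.run(argv, pass_fds=(fd,), capture_output=True, text=True, check=True)


# Stand-in for the FUSE implementation: parse the fd number from the path and read from it.
CHILD = "import os, sys; fd = int(sys.argv[1].rsplit('/', 1)[1]); print(os.read(fd, 64).decode())"

# A pipe plays the role of the fd received from the CSI driver.
read_end, write_end = os.pipe()
os.write(write_end, b"hello from the parent")
os.close(write_end)

result = launch_with_fd(read_end, [sys.executable, "-c", CHILD])
os.close(read_end)
print(result.stdout.strip())
```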

Fig.5 Inside gcs-fuse-csi-driver

In FUSE, the user-space implementation and the fuse module inside the kernel communicate by performing read(2)/write(2) on the fd obtained by opening “/dev/fuse”. CAP_SYS_ADMIN is required only for opening “/dev/fuse” and calling mount(2). Once the mount is completed, the subsequent processing can be performed without CAP_SYS_ADMIN. In gcs-fuse-csi-driver, the privileged CSI driver Pod performs the steps that require CAP_SYS_ADMIN. It then passes the resulting fd via UDS to the sidecar container inside the normal user’s Pod, so gcsfuse can operate even though the user’s Pod lacks CAP_SYS_ADMIN.
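
To give a feel for what travels over that fd, here is a rough sketch that decodes the fixed-size header at the start of every request the kernel sends. The field layout follows my reading of `struct fuse_in_header` in the kernel's `linux/fuse.h` (recent kernels subdivide the last four bytes); treat it as an assumption and check the header for your kernel. The sample bytes are constructed locally, not read from a real /dev/fuse fd.

```python
import struct

# len, opcode, unique, nodeid, uid, gid, pid, padding (native byte order, no extra alignment)
FUSE_IN_HEADER = struct.Struct("=IIQQIIII")
FUSE_INIT = 26  # opcode of the first request the kernel sends after mounting


def parse_in_header(buf):
    """Decode the request header from a buffer read from the FUSE fd."""
    length, opcode, unique, nodeid, uid, gid, pid, _padding = FUSE_IN_HEADER.unpack_from(buf)
    return {
        "len": length,
        "opcode": opcode,
        "unique": unique,
        "nodeid": nodeid,
        "uid": uid,
        "gid": gid,
        "pid": pid,
    }


# A locally constructed header standing in for bytes read from the fd.
sample = FUSE_IN_HEADER.pack(FUSE_IN_HEADER.size, FUSE_INIT, 1, 0, 0, 0, 0, 0)
header = parse_in_header(sample)
print(header)
```

Nothing in this exchange needs elevated privileges: it is plain read(2)/write(2) on a descriptor the process already holds.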

Note: over a UDS, a type of ancillary message called SCM_RIGHTS allows file descriptors to be passed between processes. This mechanism is commonly used to separate out processes that require special privileges, as in this case.
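
A minimal sketch of SCM_RIGHTS fd passing, using Python's `socket.send_fds` and `socket.recv_fds` (available since Python 3.9), which wrap the ancillary message handling. A socket pair stands in for the UDS between the CSI driver and the sidecar, and a pipe stands in for the /dev/fuse fd; both are assumptions made so the example runs without privileges.

```python
import os
import socket

# Stand-ins: one end plays the privileged CSI driver, the other the unprivileged sidecar.
privileged, unprivileged = socket.socketpair(socket.AF_UNIX, socket.SOCK_STREAM)

# A pipe plays the role of the already-mounted /dev/fuse fd.
read_end, write_end = os.pipe()
os.write(write_end, b"data behind the fd")
os.close(write_end)

# Sender: at least one byte of ordinary data must accompany the fd.
socket.send_fds(privileged, [b"\0"], [read_end])
os.close(read_end)  # the receiver gets its own reference to the open file

# Receiver: obtains a new fd number referring to the same open file.
msg, fds, _flags, _addr = socket.recv_fds(unprivileged, 1, 1)
received = os.read(fds[0], 64)
print(received)

os.close(fds[0])
privileged.close()
unprivileged.close()
```

The receiver never opens the underlying file itself, so it needs no permission to do so; it only needs to be connected to the socket.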

meta-fuse-csi-plugin: A CSI Driver for All FUSE Implementations

As mentioned earlier, gcs-fuse-csi-driver runs gcsfuse within a user Pod by performing only the steps that need CAP_SYS_ADMIN in a privileged Pod and doing the rest in a user Pod with normal permissions. However, gcs-fuse-csi-driver is designed specifically for gcsfuse, so users cannot freely swap in other FUSE implementations.

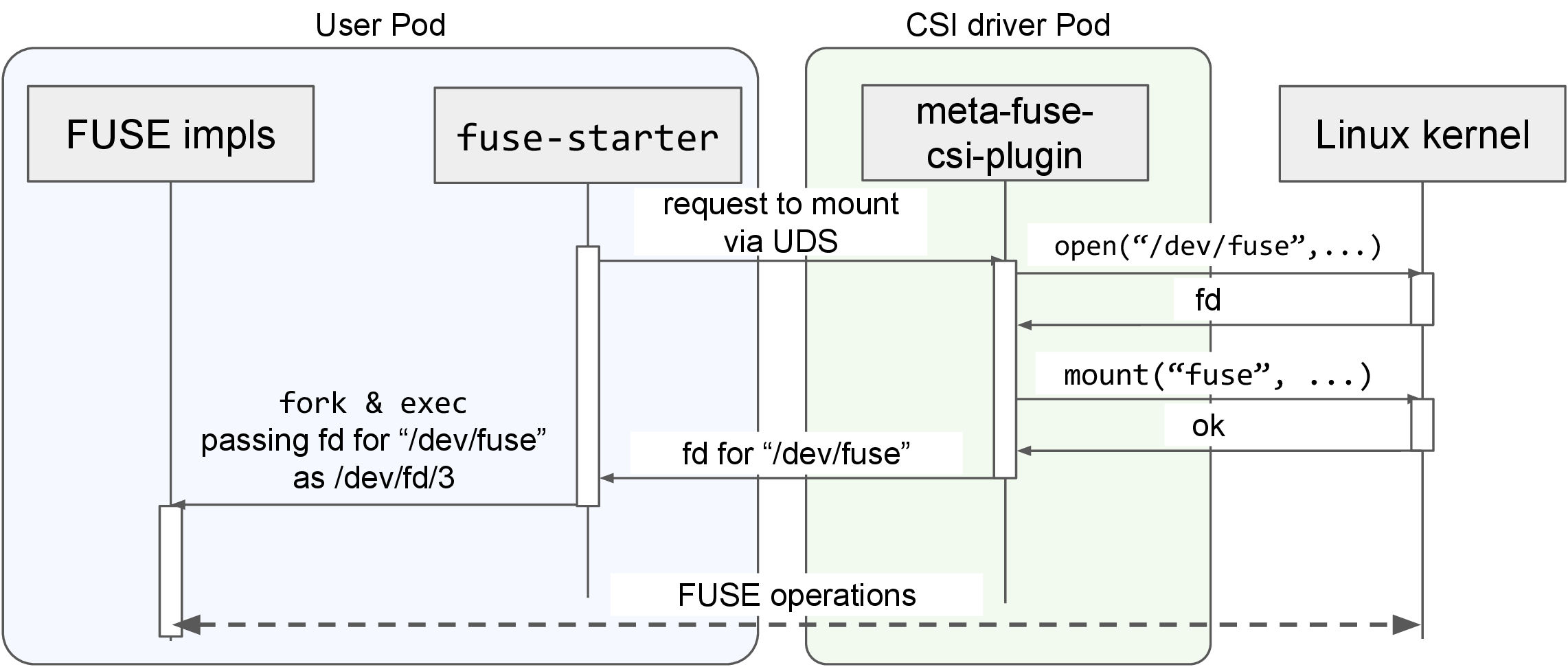

Therefore, I developed a CSI driver called meta-fuse-csi-plugin, which allows various FUSE implementations to run within a user’s Pod. The meta-fuse-csi-plugin provides two mounting methods to support as many FUSE implementations as possible without modification.

- Direct fd passing approach (fuse-starter)

- Modified fusermount3 approach (fusermount3-proxy)

1. Direct fd passing approach (fuse-starter)

In this method, the FUSE implementation is launched in the same way as in gcs-fuse-csi-driver. In libfuse3 [13], a FUSE user library, when “/dev/fd/X” is specified as the mount point, libfuse3 interprets X as the file descriptor for “/dev/fuse” and performs FUSE operations on it. jacobsa/fuse, the FUSE library used by gcsfuse, provides equivalent functionality.
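
The “/dev/fd/X” convention amounts to a small parsing step before mounting. The sketch below illustrates the idea only; it is not libfuse3 or jacobsa/fuse code, and `fd_from_mountpoint` is a hypothetical name.

```python
import re

_FD_MOUNTPOINT = re.compile(r"/dev/fd/(\d+)")


def fd_from_mountpoint(mountpoint):
    """Return the fd number if `mountpoint` has the form /dev/fd/<N>, else None."""
    match = _FD_MOUNTPOINT.fullmatch(mountpoint)
    return int(match.group(1)) if match else None


print(fd_from_mountpoint("/dev/fd/3"))   # an already-open FUSE fd: skip open(2) and mount(2)
print(fd_from_mountpoint("/mnt/data"))   # an ordinary path: mount as usual
```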

With this method, as in gcs-fuse-csi-driver, fuse-starter receives the file descriptor from the CSI driver and passes it to the FUSE implementation when launching it.

Fig.6 Mount procedure with fuse-starter

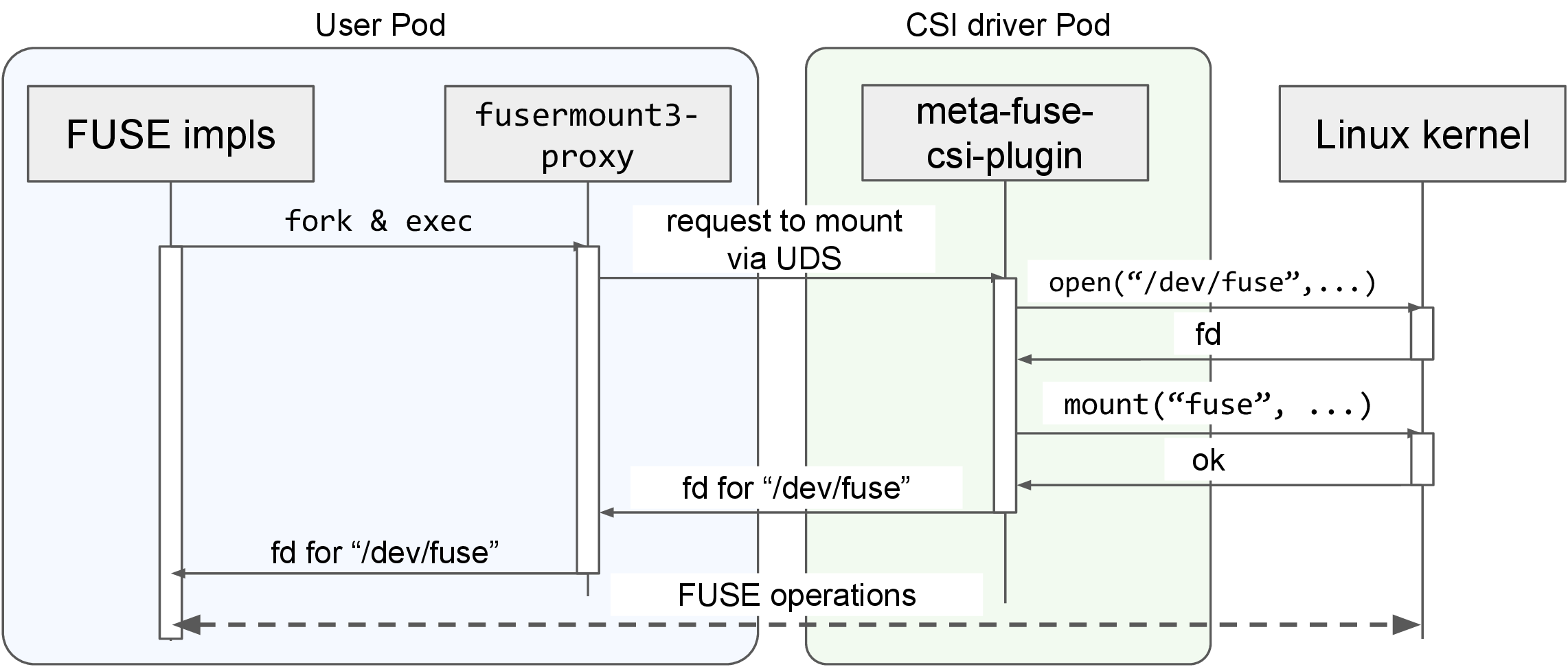

2. Modified fusermount3 approach (fusermount3-proxy)

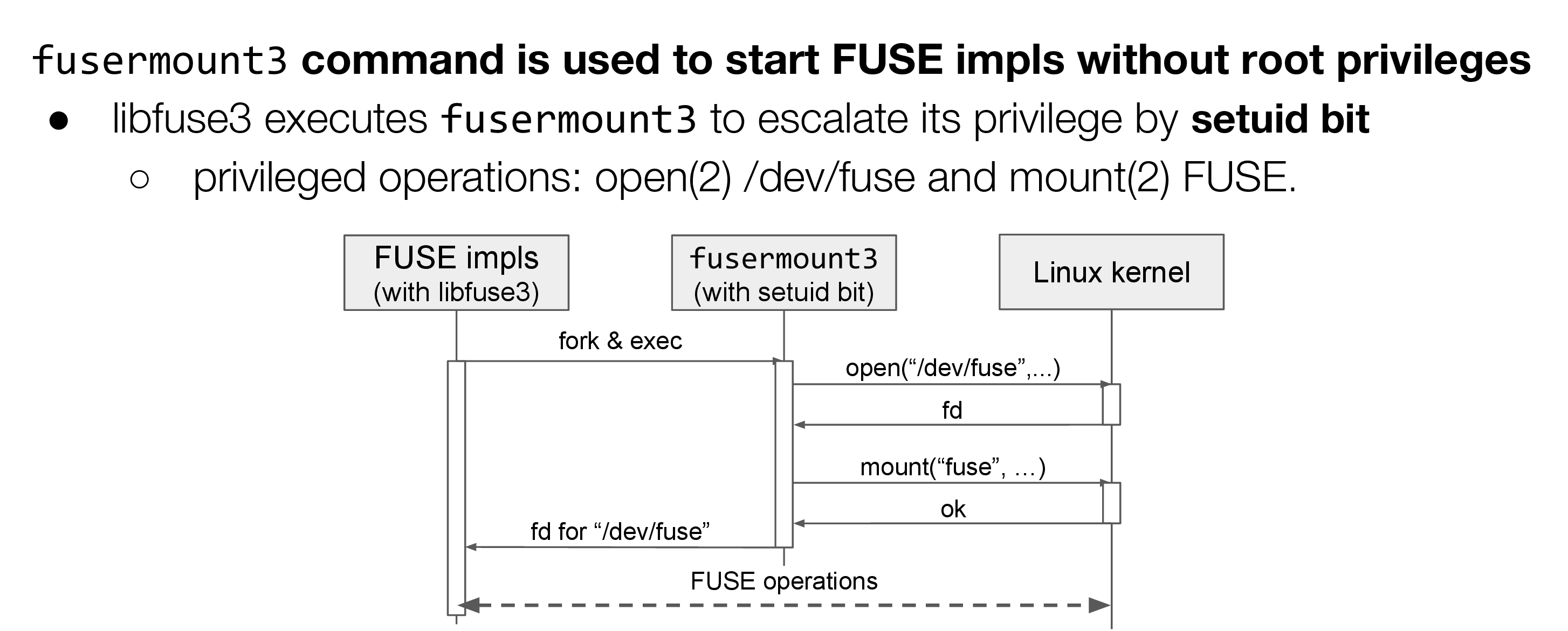

FUSE implementations built on libraries that do not accept /dev/fd/X as the fd for /dev/fuse, such as the “fuser” Rust crate, cannot use the fuse-starter method. Therefore, we implemented another method based on the fusermount3 mechanism.

fusermount3 allows normal users to mount FUSE file systems built with libfuse3 without privileges. The fusermount3 binary has the setuid bit set, so it can open(2) “/dev/fuse” and call mount(2) on behalf of an unprivileged user. The FUSE implementation itself runs with the normal user’s privileges and executes fusermount3 to perform the mount and obtain an fd for FUSE.

Fig.7 Mount procedure with libfuse3 and fusermount3

Exploiting this mechanism, we developed fusermount3-proxy, which, when called, communicates with the CSI driver to perform the mount process and passes the resulting fd to the caller via UDS. fusermount3-proxy is meant to be installed in place of fusermount3. When the FUSE implementation calls it, the implementation receives the fd just as it would from fusermount3 and performs FUSE processing using that fd.
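
The core of such a proxy is a relay: receive an fd from the CSI driver over one socket and forward it to the caller over another. The sketch below shows that relay with socket pairs standing in for both connections. It assumes, based on my understanding of libfuse, that the caller advertises its socket to fusermount3 through the `_FUSE_COMMFD` environment variable; `comm_socket_from_env` and `relay_fd` are hypothetical names, and this is not the actual fusermount3-proxy code.

```python
import os
import socket


def comm_socket_from_env(environ=os.environ):
    """Wrap the socket fd that the calling FUSE library is assumed to pass via _FUSE_COMMFD."""
    return socket.socket(fileno=int(environ["_FUSE_COMMFD"]))


def relay_fd(driver_sock, caller_sock):
    """Receive one fd from the CSI driver side and forward it to the caller."""
    _msg, fds, _flags, _addr = socket.recv_fds(driver_sock, 1, 1)
    if not fds:
        raise RuntimeError("no file descriptor received from the driver")
    try:
        socket.send_fds(caller_sock, [b"\0"], fds)
    finally:
        for fd in fds:
            os.close(fd)  # the proxy keeps no reference of its own


# Demo with stand-ins: a pipe for the FUSE fd, socket pairs for both connections.
driver_side, proxy_upstream = socket.socketpair()
proxy_downstream, caller_side = socket.socketpair()

read_end, write_end = os.pipe()
os.write(write_end, b"fuse-fd")
os.close(write_end)
socket.send_fds(driver_side, [b"\0"], [read_end])
os.close(read_end)

relay_fd(proxy_upstream, proxy_downstream)

_msg, got, _flags, _addr = socket.recv_fds(caller_side, 1, 1)
payload = os.read(got[0], 16)
print(payload)
```

From the caller's point of view nothing changes: it still runs a program named fusermount3 and gets an fd back over its own socket.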

Fig.8 Mount procedure with fusermount3-proxy

Support Status for Various FUSE Implementations

In meta-fuse-csi-plugin, we have confirmed that we can use well-known FUSE implementations such as mountpoint-s3, goofys [14], s3fs [15], ros3fs [16], gcsfuse and sshfs [17] without any modification.

Table.1 Support status for FUSE implementations

| FUSE implementation | Does it work? | Mount approach | Works without modifications? |
| --- | --- | --- | --- |
| mountpoint-s3 (S3) | ✔️ | fusermount3-proxy | ✔️ |
| goofys (S3) | ✔️ | fusermount3-proxy | ✔️ |
| s3fs (S3) | ✔️ | fusermount3-proxy | ✔️ |
| ros3fs (S3) | ✔️ | fuse-starter/fusermount3-proxy | ✔️ |
| gcsfuse (GCS) | ✔️ | fusermount3-proxy | ✔️ |
| sshfs (SSH) | ✔️ | fuse-starter/fusermount3-proxy | ✔️ |

Example: Using meta-fuse-csi-plugin with mountpoint-s3

In meta-fuse-csi-plugin, all configuration is written in the manifest, so users can run the FUSE implementation of their choice without a MutatingWebhook. The following manifest mounts mountpoint-s3 using fusermount3-proxy.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: mfcp-example-proxy-mountpoint-s3
  namespace: default
spec:
  terminationGracePeriodSeconds: 10
  containers:
  - name: minio
    image: quay.io/minio/minio:latest
    command: ["/bin/bash"]
    args: ["-c", "minio server /data --console-address :9090"]
  - name: starter
    image: ghcr.io/pfnet-research/meta-fuse-csi-plugin/mfcp-example-proxy-mountpoint-s3:latest
    imagePullPolicy: IfNotPresent
    command: ["/bin/bash"]
    args: ["-c", "./configure_minio.sh && mount-s3 test-bucket /tmp --endpoint-url \"http://localhost:9000\" -d --allow-other --auto-unmount --foreground --force-path-style"] # "--auto-unmount" forces mountpoint-s3 to use fusermount3
    env:
    - name: FUSERMOUNT3PROXY_FDPASSING_SOCKPATH # UDS path to connect to csi driver
      value: "/fusermount3-proxy/fuse-csi-ephemeral.sock"
    - name: AWS_ACCESS_KEY_ID
      value: "minioadmin"
    - name: AWS_SECRET_ACCESS_KEY
      value: "minioadmin"
    volumeMounts:
    - name: fuse-fd-passing # dir for UDS
      mountPath: /fusermount3-proxy
  - image: busybox
    name: busybox
    command: ["/bin/ash"]
    args: ["-c", "while [[ ! \"$(/bin/mount | grep fuse)\" ]]; do echo \"waiting for mount\" && sleep 1; done; sleep infinity"]
    volumeMounts:
    - name: fuse-csi-ephemeral # mounting volume provided by meta-fuse-csi-plugin
      mountPath: /data
      readOnly: true
      mountPropagation: HostToContainer # propagating host-side mount to container-side
  volumes:
  - name: fuse-fd-passing # dir for UDS
    emptyDir: {}
  - name: fuse-csi-ephemeral # volume with meta-fuse-csi-plugin
    csi:
      driver: meta-fuse-csi-plugin.csi.storage.pfn.io
      readOnly: true
      volumeAttributes:
        fdPassingEmptyDirName: fuse-fd-passing
        fdPassingSocketName: fuse-csi-ephemeral.sock
```

Manifest for mounting mountpoint-s3 with fusermount3-proxy

The starter container runs mountpoint-s3, and the busybox container consumes the volume mounted via mountpoint-s3.

The starter container includes fusermount3-proxy, and “--auto-unmount” is specified as a workaround to force mountpoint-s3 to use fusermount3. The environment variable FUSERMOUNT3PROXY_FDPASSING_SOCKPATH specifies the UDS that fusermount3-proxy uses to communicate with the CSI driver. The other environment variables specify the credentials needed to access the bucket. The container’s volumeMounts include an emptyDir where the UDS for communication with the CSI driver is placed.

In the busybox container, the volume mounted by mountpoint-s3 is specified as a volumeMount. mount(2) is executed again on the host side after the container starts, so “mountPropagation: HostToContainer” is specified in volumeMounts to propagate the mount into the container.
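
Because the mount appears only after the container has started, the busybox container in the manifest polls the output of mount until a FUSE entry shows up. The same check can be sketched by parsing text in the /proc/mounts format. The sample text below is made up for illustration, and `fuse_mounts` is a hypothetical helper; unlike a plain grep for "fuse", it ignores the fusectl control file system.

```python
def fuse_mounts(mounts_text):
    """Return (mount_point, fs_type) pairs for FUSE file systems in /proc/mounts-style text."""
    found = []
    for line in mounts_text.splitlines():
        fields = line.split()
        if len(fields) < 3:
            continue
        mount_point, fs_type = fields[1], fields[2]
        # FUSE mounts report "fuse" or "fuse.<subtype>" as their type.
        if fs_type == "fuse" or fs_type.startswith("fuse."):
            found.append((mount_point, fs_type))
    return found


# Made-up sample; a real check would read /proc/self/mounts instead.
SAMPLE = """\
overlay / overlay rw,relatime 0 0
fusectl /sys/fs/fuse/connections fusectl rw,relatime 0 0
mountpoint-s3 /data fuse ro,nosuid,nodev,relatime 0 0
"""
print(fuse_mounts(SAMPLE))
```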

For volumes, an emptyDir for placing the UDS and a volume provided by CSI driver are specified. For volumeAttributes, the directory path and name for UDS are specified.
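
These two attributes are enough for a driver on the same node to locate the socket through the host file system. The sketch below shows how such a path could be derived, assuming kubelet's default root directory and its usual emptyDir layout under each Pod's directory. `host_socket_path` and the Pod UID are hypothetical, and the actual plugin may resolve the path differently.

```python
from pathlib import PurePosixPath

# Assumption: kubelet uses its default root directory.
KUBELET_PODS_DIR = PurePosixPath("/var/lib/kubelet/pods")


def host_socket_path(pod_uid, empty_dir_name, socket_name):
    """Build the host-side path of a UDS placed in a Pod's emptyDir volume."""
    for part in (pod_uid, empty_dir_name, socket_name):
        if not part or "/" in part or part in (".", ".."):
            raise ValueError(f"invalid path component: {part!r}")
    return (
        KUBELET_PODS_DIR
        / pod_uid
        / "volumes"
        / "kubernetes.io~empty-dir"
        / empty_dir_name
        / socket_name
    )


# Hypothetical Pod UID combined with the volumeAttributes from the manifest above.
print(host_socket_path("0f3c9a2e-pod-uid", "fuse-fd-passing", "fuse-csi-ephemeral.sock"))
```

Rejecting components that contain a slash keeps user-supplied attributes from pointing the privileged driver outside the Pod's own emptyDir.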

By deploying the above manifest to a Kubernetes cluster where the meta-fuse-csi-plugin has been deployed, users can freely use the FUSE implementation they want to use within their Pods without any special privileges.

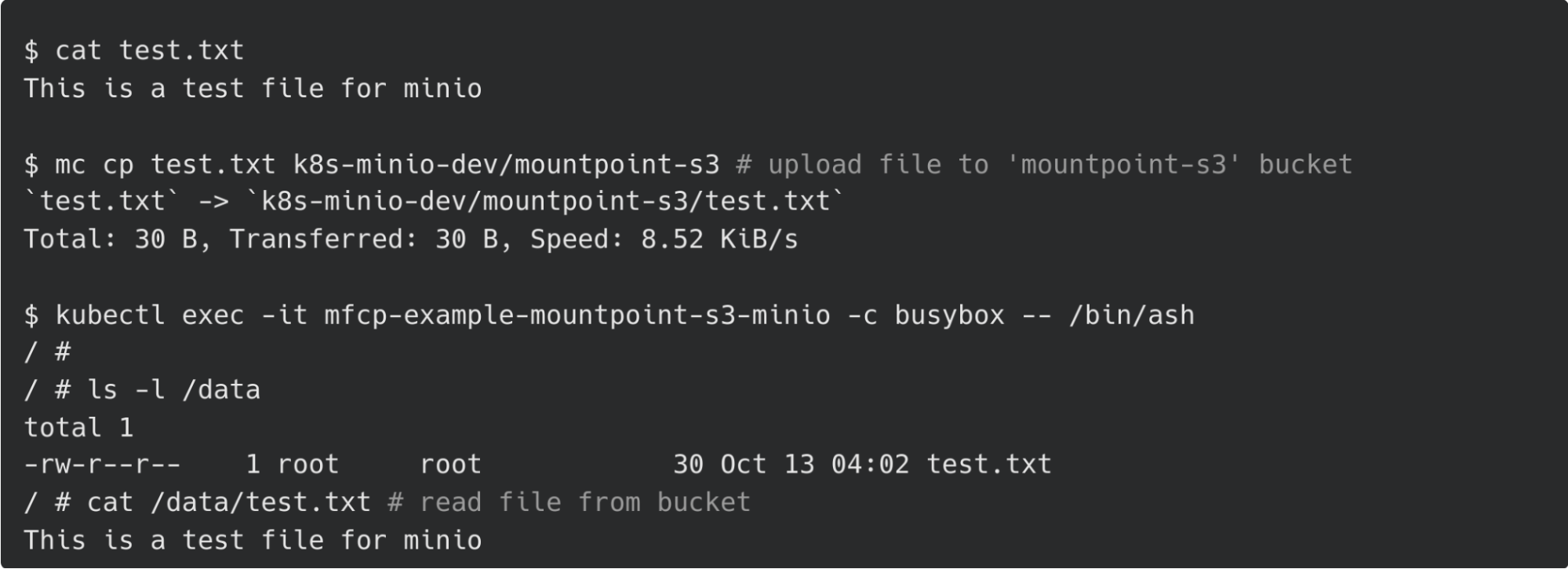

Fig.9 Reading files mounted by mountpoint-s3 in user’s Pod

Future Prospects

We developed meta-fuse-csi-plugin as a CSI driver that lets users freely use whichever FUSE implementation they want. Although we confirmed that it works with various FUSE implementations, two concerns remain for practical use.

The first is security. We have not yet sufficiently investigated the security risks of passing a file descriptor opened by a privileged process to a non-privileged process.

The second is stability. We need to investigate whether an unstable FUSE implementation or malicious operations by a user could adversely affect other Pods or the entire cluster. Although meta-fuse-csi-plugin is designed to be as simple as possible, we still need to examine what side effects could arise in abnormal cases.

With meta-fuse-csi-plugin, object storage can now be operated like a regular file system by using existing implementations such as mountpoint-s3. Our distributed cache system has its own API, so existing implementations cannot be used and a new FUSE implementation is necessary. Even if users write such an implementation on their own, we expect that they will be able to use it freely within our Kubernetes cluster through meta-fuse-csi-plugin.

Acknowledgments

In the development of meta-fuse-csi-plugin, I gained a deeper understanding of the Kubernetes ecosystem and CSI drivers. By incorporating needs from those who actually use Kubernetes clusters, I was able to develop a more practical CSI driver. The internship period passed by like a flash, and it was a very fulfilling experience. Through interactions with other intern students and employees, I was able to broaden my perspective not only in my own field but also in other areas.

I am deeply grateful to my mentors, Mr. Ueno and Mr. Uenishi, as well as to the Cluster Service and Storage Team, for their support in my daily work and discussions.

From mentors

I am Yuichiro Ueno, and I develop and operate our machine learning platform on Kubernetes. The purpose of this internship was to investigate how to use many FUSE implementations on Kubernetes. This had already been achieved for gcsfuse by gcs-fuse-csi-driver, but that approach could not yet be ported to other implementations. I expected that designing a good CSI plugin interface that supports many FUSE implementations would be difficult, but Mr. Matsumoto developed a new method called fusermount3-proxy that makes it possible to use many FUSE implementations on Kubernetes.

PFN not only conducts research on machine learning and deep learning, but also researches and develops its own machine learning platform, including a distributed cache system and object storage systems. If you are interested, we look forward to hearing from you.

References

[1]: “2022年のPFNの機械学習基盤 – Preferred Networks Research & Development”, https://tech.preferred.jp/ja/blog/ml-kubernetes-cluster-2022/

[2]: “A Year with Apache Ozone – Preferred Networks Research & Development”, https://tech.preferred.jp/en/blog/a-year-with-apache-ozone/

[3]: “Two Years with Apache Ozone – Preferred Networks Research & Development”, https://tech.preferred.jp/en/blog/two-years-with-apache-ozone/

[4]: “深層学習のための分散キャッシュシステム – Preferred Networks Research & Development”, https://tech.preferred.jp/ja/blog/distributed-cache-for-deep-learning/

[5]: “Apache Ozone”, https://ozone.apache.org/

[6]: “GitHub – aws/aws-cli: Universal Command Line Interface for Amazon Web Services”, https://github.com/aws/aws-cli

[7]: “What is SDK? – SDK Explained – AWS”, https://aws.amazon.com/what-is/sdk/

[8]: “GitHub – awslabs/mountpoint-s3: A simple, high-throughput file client for mounting an Amazon S3 bucket as a local file system.”, https://github.com/awslabs/mountpoint-s3

[9]: “FUSE — The Linux Kernel documentation”, https://www.kernel.org/doc/html/next/filesystems/fuse.html

[10]: “GitHub – GoogleCloudPlatform/gcsfuse: A user-space file system for interacting with Google Cloud Storage”, https://github.com/GoogleCloudPlatform/gcsfuse

[11]: “Kubernetes CSI Developer Documentation”, https://kubernetes-csi.github.io/docs/

[12]: “Google Cloud Storage FUSE CSI Driver”, https://github.com/GoogleCloudPlatform/gcs-fuse-csi-driver

[13]: “GitHub – libfuse/libfuse: The reference implementation of the Linux FUSE (Filesystem in Userspace) interface”, https://github.com/libfuse/libfuse

[14]: “GitHub – kahing/goofys: a high-performance, POSIX-ish Amazon S3 file system written in Go”, https://github.com/kahing/goofys

[15]: “GitHub – s3fs-fuse/s3fs-fuse: FUSE-based file system backed by Amazon S3”, https://github.com/s3fs-fuse/s3fs-fuse

[16]: “GitHub – akawashiro/ros3fs: ros3fs is a Linux FUSE adapter for AWS S3 and S3 compatible object storages”, https://github.com/akawashiro/ros3fs

[17]: “GitHub – libfuse/sshfs: A network filesystem client to connect to SSH servers”, https://github.com/libfuse/sshfs
